Round-robin client-side load-balancing and connection failure detection with pooled-connection-factory configured with multiple netty-connectors

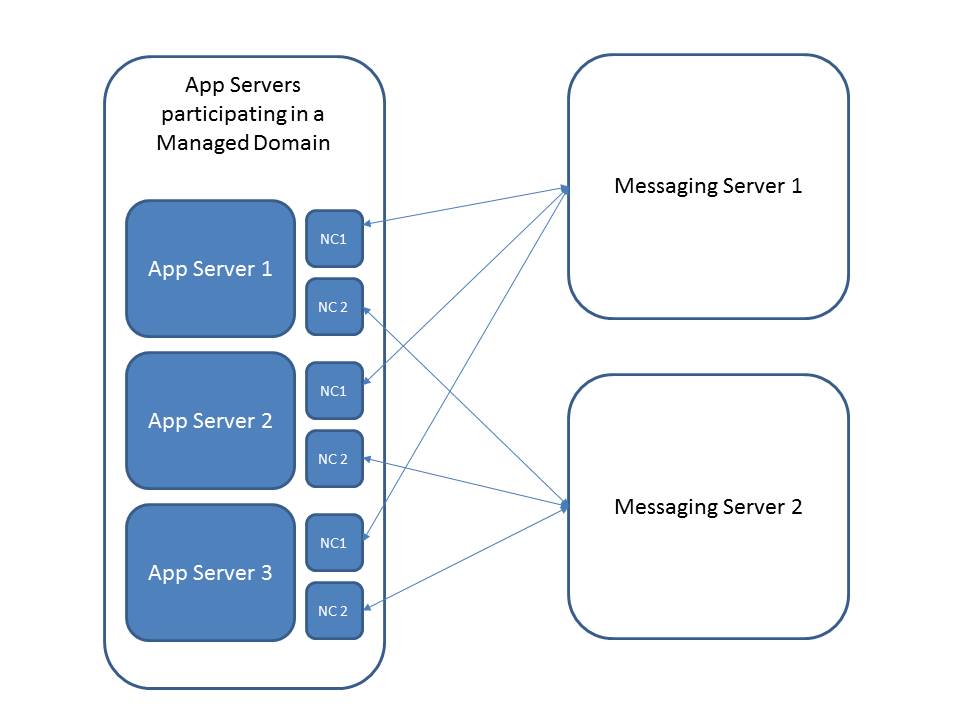

vbchin2, Nov 15, 2013 2:55 PM

We are trying to use a HornetQ pooled-connection-factory configured with two netty-connectors that point to two remote AS 7 instances, each acting purely as a JMS messaging server. The setup (shown below) works, but we find that:

- The connection pool appears to be filled with connections from only one netty-connector.

- When connections are opened and closed in sequence, with only one connection active at a time, every new connection goes through the same netty-connector. We understand the pooling behavior, but we expected connections to alternate between the two configured netty-connectors, since round-robin is the default.

- Suppose a connection was created through one netty-connector (for example, netty-remote1), and messaging server #1 dies after that connection is closed but before a new one is requested. Instead of throwing an exception, the pooled-connection-factory keeps trying to connect to the dead messaging server and waits indefinitely.

So, our questions:

- Is this well-known or expected behavior?

- What else can be configured so that the pooled-connection-factory:

  - Detects failures and serves only the established connections, instead of waiting indefinitely for the dead server to come back up?

  - Fills the connection pool by round-robining between the netty-connectors, and also hands out connections in round-robin fashion when one is requested?
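For the round-robin question, HornetQ lets a connection factory name the policy it uses to pick one of its connectors. The fragment below is a minimal sketch, assuming the AS 7 messaging subsystem accepts a `connection-load-balancing-policy-class-name` element on `pooled-connection-factory` (worth checking against the messaging schema of your AS 7 version). As far as we know, `RoundRobinConnectionLoadBalancingPolicy` is already HornetQ's default, and it starts from a randomly chosen connector before moving through the list in order. As we understand it, the policy is consulted only when a new underlying connection is created, so it may have no effect when the JCA pool simply hands back an idle connection it already holds.

```xml
<pooled-connection-factory name="hornetq-ra-remote">
    <connectors>
        <connector-ref connector-name="netty-remote1"/>
        <connector-ref connector-name="netty-remote2"/>
    </connectors>
    <entries>
        <entry name="java:/RemoteJmsXA"/>
    </entries>
    <!-- Assumption: element name as in the AS 7 messaging subsystem schema. -->
    <!-- Round-robin is believed to be the default; naming it here only makes the intent explicit. -->
    <connection-load-balancing-policy-class-name>org.hornetq.api.core.client.loadbalance.RoundRobinConnectionLoadBalancingPolicy</connection-load-balancing-policy-class-name>
</pooled-connection-factory>
```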

The following goes into the domain.xml file for the app servers (pasting only the relevant sections):

```xml
<connectors>
    <netty-connector name="netty" socket-binding="messaging"/>
    <netty-connector name="netty-remote1" socket-binding="messaging-remote1"/>
    <netty-connector name="netty-remote2" socket-binding="messaging-remote2"/>
    <netty-connector name="netty-throughput" socket-binding="messaging-throughput">
        <param key="batch-delay" value="50"/>
    </netty-connector>
    <in-vm-connector name="in-vm" server-id="0"/>
</connectors>

<pooled-connection-factory name="hornetq-ra-remote">
    <connectors>
        <connector-ref connector-name="netty-remote1"/>
        <connector-ref connector-name="netty-remote2"/>
    </connectors>
    <entries>
        <entry name="java:/RemoteJmsXA"/>
    </entries>
</pooled-connection-factory>

....

<outbound-socket-binding name="messaging-remote1">
    <remote-destination host="192.168.1.2" port="5445"/>
</outbound-socket-binding>
<outbound-socket-binding name="messaging-remote2">
    <remote-destination host="192.168.1.3" port="5445"/>
</outbound-socket-binding>
<outbound-socket-binding name="mail-smtp">
    <remote-destination host="localhost" port="25"/>
</outbound-socket-binding>
</socket-binding-group>
```
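For the indefinite wait, HornetQ's client-side failure-detection and reconnection settings may be relevant. The fragment below is a minimal sketch of the same `hornetq-ra-remote` factory with those settings added, assuming these element names are accepted on `pooled-connection-factory` by your AS 7 messaging subsystem version. The values are placeholders chosen for illustration, not tuned recommendations. In HornetQ a `reconnect-attempts` value of -1 means "retry forever", so a finite value should let the client give up and report the failure; whether this also covers opening a brand-new connection to a server that is already down is something to verify on your setup.

```xml
<pooled-connection-factory name="hornetq-ra-remote">
    <connectors>
        <connector-ref connector-name="netty-remote1"/>
        <connector-ref connector-name="netty-remote2"/>
    </connectors>
    <entries>
        <entry name="java:/RemoteJmsXA"/>
    </entries>
    <!-- Placeholder values for illustration only. -->
    <!-- If no data arrives from the server within this period (ms), the client treats the connection as failed. -->
    <client-failure-check-period>10000</client-failure-check-period>
    <!-- How long (ms) the server keeps the connection alive after it stops hearing from the client. -->
    <connection-ttl>30000</connection-ttl>
    <!-- Maximum time (ms) a blocking call waits for a server response before failing. -->
    <call-timeout>15000</call-timeout>
    <!-- Finite number of reconnection attempts after a failure; -1 would retry forever. -->
    <reconnect-attempts>3</reconnect-attempts>
    <!-- Delay (ms) between reconnection attempts. -->
    <retry-interval>2000</retry-interval>
</pooled-connection-factory>
```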