-

1. Re: Remote Txn Inflow: Synchronizations

tomjenkinson Sep 27, 2011 9:43 AM (in response to dmlloyd)

Hi David,

I don't think we reached an actual conclusion on this. The difficulty with synchronizations is that when one is registered on the subordinate transaction manager, we ideally need to be able to register it on the root transaction manager too, so that the ordering is correct during completion.

For example:

Root TM

XA resource for DB1 used

Synchronization registered to flush SQL to DB1 during beforeCompletion

Txn flows to subordinate TM

XA resource for DB1 used again

Synchronization registered to flush SQL to DB1 during beforeCompletion

Now, during transaction completion, if you don't have network-aware synchronizations, the sequence could be:

1. beforeCompletion sync on root TM flushes SQL to DB1

2. XA resource for DB1 prepares

3. beforeCompletion sync on sub TM flushes SQL to DB1, resulting in an error because the XA resource has already prepared

To work around this, we need some mechanism for synchronizations registered on the subordinate TM to be called before the transaction completes. For performance reasons, that would probably mean extending the TM somehow to support registering these with the root TM, though this would possibly make the JTA transport aware. Instead, we could use eager interposition and always register a "proxy synchronization" for each subordinate TM, so that when the root TM runs through its list of synchronizations it calls back to each subordinate TM to do the beforeCompletion events, even if the subordinate TM does not have any synchronizations to run (apart from possibly cascading these down).
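The eager-interposition idea can be sketched in a few lines of Java. This is only an illustration under stated assumptions: `Sync` is a local stand-in for `javax.transaction.Synchronization` (so the sketch compiles without a JTA jar), `RootTm`, `SubordinateTm` and `ProxySync` are hypothetical names, and the remote call is simulated by a direct method call rather than a real transport.

```java
import java.util.ArrayList;
import java.util.List;

/** Stand-in for javax.transaction.Synchronization (assumption: same two callbacks). */
interface Sync {
    void beforeCompletion();
    void afterCompletion(int status);
}

/** Hypothetical subordinate TM that holds locally registered synchronizations. */
class SubordinateTm {
    private final List<Sync> localSyncs = new ArrayList<>();
    void registerSynchronization(Sync s) { localSyncs.add(s); }
    /** In a real transport this would be triggered by a message from the parent. */
    void doBeforeCompletion() { for (Sync s : localSyncs) s.beforeCompletion(); }
    void doAfterCompletion(int status) { for (Sync s : localSyncs) s.afterCompletion(status); }
}

/** Proxy registered with the root TM on behalf of one subordinate TM. */
class ProxySync implements Sync {
    private final SubordinateTm remote; // would be a remote stub in practice
    ProxySync(SubordinateTm remote) { this.remote = remote; }
    public void beforeCompletion() { remote.doBeforeCompletion(); }
    public void afterCompletion(int status) { remote.doAfterCompletion(status); }
}

/** Hypothetical root TM: runs every beforeCompletion, then prepares and commits. */
class RootTm {
    private final List<Sync> syncs = new ArrayList<>();
    private final List<String> log;
    RootTm(List<String> log) { this.log = log; }
    void registerSynchronization(Sync s) { syncs.add(s); }
    void complete() {
        for (Sync s : syncs) s.beforeCompletion();
        log.add("prepare DB1");
        log.add("commit DB1");
        for (Sync s : syncs) s.afterCompletion(3); // 3 is Status.STATUS_COMMITTED in JTA
    }
}

class ProxySyncDemo {
    static Sync flush(String name, List<String> log) {
        return new Sync() {
            public void beforeCompletion() { log.add(name + " flush"); }
            public void afterCompletion(int status) { }
        };
    }

    static List<String> run() {
        List<String> log = new ArrayList<>();
        RootTm root = new RootTm(log);
        SubordinateTm sub = new SubordinateTm();
        root.registerSynchronization(flush("root", log));
        root.registerSynchronization(new ProxySync(sub)); // eager interposition
        sub.registerSynchronization(flush("sub", log));
        root.complete();
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

With the proxy registered, the subordinate's flush is driven from the root's beforeCompletion pass and so lands ahead of the prepare of DB1. The cost is one call per subordinate even when it has nothing to run.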

Tom

PS It's really part of the other thread but we will possibly need a similar mechanism to register subordinate transaction managers as XAResources of the root TM in order to cascade recovery calls, I am still checking this out though.

-

2. Re: Remote Txn Inflow: Synchronizations

dmlloyd Sep 27, 2011 10:46 AM (in response to tomjenkinson)

I was thinking that the root node could simply register a Synchronization (and perhaps also an interposed Synchronization) with its local TM resources. This allows it to direct the remote clients through these phases. The question would be how to inflow these requests into the subordinate nodes (and their subordinate nodes, etc.).

-

3. Re: Remote Txn Inflow: Synchronizations

tomjenkinson Sep 28, 2011 10:42 AM (in response to dmlloyd)

Hi David,

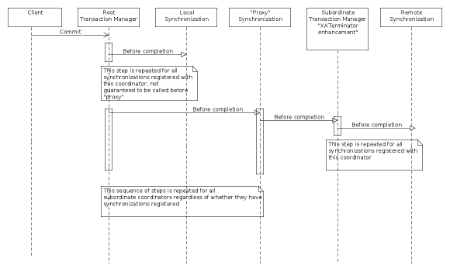

Hopefully this image:

a. Renders OK in SBS :s

b. Explains what I was thinking

It looks like we would need at least two additional functions to XATerminator, in the diagram referred to as "XA Terminator enhancement":

a. A before completion method

b. An after completion method

We have the hooks in SubordinateAtomicAction to provide the before completion method; we would need to add some more hooks to split out afterCompletion as separate from the normal transaction completion routines.

In this picture, I would expect the transport to register a "proxy synchronization" for each subordinate transaction manager that the transaction flows to using the standard Transaction::registerSynchronization mechanism. This proxy would need to be implemented by the (in this case remoting) transport and know how to invoke the remote (new) beforeCompletion/afterCompletion methods on our "enhanced" XATerminator class.

Does that sound reasonable?

Note, I am also thinking that a similar method will need to be employed to ship the Transaction related functions around, i.e. the transport will be responsible for registering an XAResource with the transaction which is responsible for performing transport layer shipping of these messages to the subordinate transaction manager.

The main issue I am still getting my head around is recovery of this system and in particular a way of altering the XID so that local transaction managers can create branches for their XAResources. JCA does not permit this so it still needs a think.

Tom

-

4. Re: Remote Txn Inflow: Synchronizations

dmlloyd Sep 29, 2011 9:51 AM (in response to tomjenkinson)

Tom Jenkinson wrote:

Hi David,

It looks like we would need at least two additional functions to XATerminator, in the diagram referred to as "XA Terminator enhancement":

a. A before completion method

b. An after completion method

Just a quick note here that we can use other classes than XATerminator; there is no need for this particular code (i.e. the EJB server transaction inflow code) to be loosely coupled from the transaction manager, so we should implement the most direct and most correct solution, which probably means just bypassing XATerminator and using the APIs consumed by it. In fact we are already planning on doing so because EJB invocation isn't done with "Work" which is presently the way that tasks are executed via JBossXATerminator instances.

But yeah, adding these methods (and a protocol message to convey them) is what I mean by "a way to inflow these requests". The question is, do we support interposed sync as well, which (as far as I understand) would mean two phases of each completion method (one to fire off non-interposed and one to fire off interposed)?

Tom Jenkinson wrote:

In this picture, I would expect the transport to register a "proxy synchronization" for each subordinate transaction manager that the transaction flows to using the standard Transaction::registerSynchronization mechanism. This proxy would need to be implemented by the (in this case remoting) transport and know how to invoke the remote (new) beforeCompletion/afterCompletion methods on our "enhanced" XATerminator class.

Does that sound reasonable?

Yeah that should be fine, just let me know what the method hooks will be. I'm also fine with using SubordinationManager directly, or providing an alternative extended version of com.arjuna.ats.internal.jta.transaction.arjunacore.jca.XATerminatorImple, or whatever is easiest for you guys. In particular, at a protocol level it does us no good to have all the exceptions mapped to XAException because we'll just have to decode it again and send it over the wire; it just costs an extra, useless transaction wrapping.

Tom Jenkinson wrote:

Note, I am also thinking that a similar method will need to be employed to ship the Transaction related functions around, i.e. the transport will be responsible for registering an XAResource with the transaction which is responsible for performing transport layer shipping of these messages to the subordinate transaction manager.

Yeah the current mechanism involves getting an XID how you describe (via the client registering itself as an XAResource) and attaching the resultant XID to all requests in that transaction; the server-side interceptor will be responsible for mapping the XID to a Transaction to resume for the duration of the request. I think we probably should use the same SubordinationManager-based scheme for looking up the transaction though.
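A minimal Java sketch of the server-side lookup from XID to transaction, for illustration only. It is a plain in-memory map, not the SubordinationManager-based scheme mentioned above. `XidKey`, `InflowedTransactions` and `SimpleXid` are hypothetical names, and the type parameter `T` stands in for whatever transaction object the interceptor would resume. The key wrapper exists because `javax.transaction.xa.Xid` implementations are not required to define value-based `equals`/`hashCode`.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;
import javax.transaction.xa.Xid;

/** Value-based map key built from the three parts of an Xid. */
final class XidKey {
    private final int formatId;
    private final byte[] gtrid;
    private final byte[] bqual;

    XidKey(Xid xid) {
        formatId = xid.getFormatId();
        gtrid = xid.getGlobalTransactionId().clone();
        bqual = xid.getBranchQualifier().clone();
    }

    @Override public boolean equals(Object o) {
        if (!(o instanceof XidKey)) return false;
        XidKey k = (XidKey) o;
        return formatId == k.formatId
                && Arrays.equals(gtrid, k.gtrid)
                && Arrays.equals(bqual, k.bqual);
    }

    @Override public int hashCode() {
        return 31 * (31 * formatId + Arrays.hashCode(gtrid)) + Arrays.hashCode(bqual);
    }
}

/** Hypothetical registry used by a server-side interceptor; T is the transaction type. */
final class InflowedTransactions<T> {
    private final Map<XidKey, T> byXid = new ConcurrentHashMap<>();

    /** Returns the transaction for this Xid, importing it on first sight. */
    T importOrGet(Xid xid, Supplier<T> importer) {
        return byXid.computeIfAbsent(new XidKey(xid), k -> importer.get());
    }

    T lookup(Xid xid) { return byXid.get(new XidKey(xid)); }

    /** Called once the transaction has completed. */
    void forget(Xid xid) { byXid.remove(new XidKey(xid)); }
}

/** Trivial Xid implementation, only here so the sketch can be exercised. */
final class SimpleXid implements Xid {
    private final int formatId;
    private final byte[] gtrid;
    private final byte[] bqual;

    SimpleXid(int formatId, byte[] gtrid, byte[] bqual) {
        this.formatId = formatId;
        this.gtrid = gtrid.clone();
        this.bqual = bqual.clone();
    }

    public int getFormatId() { return formatId; }
    public byte[] getGlobalTransactionId() { return gtrid.clone(); }
    public byte[] getBranchQualifier() { return bqual.clone(); }
}
```

Two requests carrying equal XID bytes then resolve to the same transaction object, even if each request deserializes its own `Xid` instance.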

Tom Jenkinson wrote:

The main issue I am still getting my head around is recovery of this system and in particular a way of altering the XID so that local transaction managers can create branches for their XAResources. JCA does not permit this so it still needs a think.

Tom

Yeah this is the #1 issue. I think the only real option is a new XID format.

Since the node relationship is hierarchical, it makes sense to me that the bqual should also be hierarchical. We could use a single integer value to represent the branch relative to a particular node, and keep a local mapping of value to subnode identification. This would be enough information to recover, as long as the node connections are all active.

Using fixed-length integers, the 64-byte limit on the bqual restricts things considerably. Even if you didn't need any bytes for recording existing information, one byte per level gives you at most 64 levels of 256 resources per level.

You could however use integer packing to make more room; in such a scheme, the bqual bits which identify the node would still be an integer sequence but would work like this:

- Values 0000-0111 (0-7): resources 0-7, length is 4 bits

- Values 1000 0000-1011 1111 (0x80-0xBF): resources 8-71, length is 8 bits

- Values 1100 0000 0000-1101 1111 1111 (0xC00-0xDFF): resources 72-583, length is 12 bits

- etc.

You can tell how many bits to consume by the number of leading high-order 1 bits. Such a scheme can be expanded indefinitely, and supports both many levels with few resources and few levels with many resources.
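The packing rule can be written down as a small Java sketch. It assumes the layout implied by the ranges listed above: a value that occupies k nybbles starts with k-1 one bits and a zero bit, followed by 3k payload bits. The class and method names are hypothetical.

```java
final class NybblePackedIndex {
    /** First resource index that needs k nybbles: 0, 8, 72, 584, ... */
    static long base(int k) {
        long b = 0;
        for (int j = 1; j < k; j++) b += 1L << (3 * j);
        return b;
    }

    /** Number of nybbles used to encode resource index r. */
    static int length(int r) {
        if (r < 0) throw new IllegalArgumentException("negative index");
        int k = 1;
        while (r >= base(k + 1)) k++;
        return k;
    }

    /** Code layout: (k-1) one bits, one zero bit, then 3k payload bits. */
    static long encode(int r) {
        int k = length(r);
        long prefix = ((1L << (k - 1)) - 1) << (3 * k + 1);
        return prefix | (r - base(k));
    }

    /** Length implied by the first nybble, valid while the prefix fits in it (k <= 4). */
    static int lengthFromFirstNybble(int nybble) {
        if (nybble == 0xF) throw new IllegalArgumentException("prefix continues in next nybble");
        return Integer.numberOfLeadingZeros(~(nybble << 28)) + 1;
    }

    /** Recovers the resource index from a code that is k nybbles long. */
    static int decode(long code, int k) {
        return (int) (base(k) + (code & ((1L << (3 * k)) - 1)));
    }

    public static void main(String[] args) {
        for (int r : new int[] {0, 7, 8, 71, 72, 583}) {
            System.out.printf("%d -> 0x%X (%d nybbles)%n", r, encode(r), length(r));
        }
    }
}
```

The boundary values in `main` correspond to the ends of the three ranges in the list, so they make a convenient sanity check for any real implementation.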

I assume that as part of recovery, we will have to somehow recreate all of our XAResources? Or does the TM serialize them or something?

-

5. Re: Remote Txn Inflow: Synchronizations

dmlloyd Sep 29, 2011 10:54 AM (in response to dmlloyd)

BTW, it looks like SubordinateTransaction already has doBeforeCompletion() on it, and that is made available on com.arjuna.ats.internal.jta.resources.spi.XATerminatorExtensions. But that doesn't answer the interposed synch question...

-

6. Re: Remote Txn Inflow: Synchronizations

jhalliday Sep 29, 2011 12:55 PM (in response to dmlloyd)

David

We need to be really careful discussing interposed syncs, because the term is overloaded and hence ambiguous.

In the context of JTA 1.1, TransactionSynchronizationRegistry.registerInterposedSynchronization has specific partial ordering guarantees. However, as the JTA does not explicitly cover distributed tx semantics, those ordering guarantees are relative to the other Synchronizations in the same JVM only. We may or may not wish to offer wider guarantees or config options according to how useful they are and how easy they are to implement.

The term 'interposition' also has meaning in distributed tx: a subordinate placed between the root coordinator and eventual child resource or Synchronization to form a tree structure. There are several ways of implementing that, including: Synchronization objects may only be registered with their local node and are driven as part of that local node's beforeCompletion phase. That phase may or may not itself be interposed to the corresponding phase in the root coordinator. Alternatively, the local Synchronization may be wrapped in a remote transport aware proxy and registered with the root (or an intermediate parent if the tree is multi level) coordinator. In that case the beforeCompletion phase in the child node is a no-op, the local Synchronization list being empty. Naturally all three solutions display subtly different semantics and not so subtly different performance characteristics.

The final piece of background you need before we continue on to implementation choices is this: the transaction engine underpinning the JTA is not directly aware of the distinction between JTA 'interposed' and regular Synchronizations. It does not maintain separate lists for them, nor process them in separate phases. The necessary ordering guarantees are achieved by sorting a single list of heterogeneous Synchronization elements using, amongst other things, their 'interposed' attribute. This impacts how easy it is to expose separate lifecycle phases so the subordinate tx's lifecycle can be driven in a more fine grained manner. Specifically, it's easy to expose beforeCompletion and have it run all the Syncs, but considerably harder to expose separate methods for running regular and JTA-interposed syncs.
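To make the "single sorted list" point concrete, here is a small Java sketch. It is not the transaction engine's code: `SyncEntry` and `SingleListOrdering` are hypothetical, and the comparator looks only at the 'interposed' flag, whereas the real sort is described above as using other attributes too. The ordering follows the JTA 1.1 rule that interposed synchronizations get beforeCompletion after the regular ones and afterCompletion before them.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical list element: a named synchronization plus its 'interposed' attribute. */
final class SyncEntry {
    final String name;
    final boolean interposed;

    SyncEntry(String name, boolean interposed) {
        this.name = name;
        this.interposed = interposed;
    }
}

final class SingleListOrdering {
    /** Regular entries sort ahead of interposed ones (false sorts before true). */
    static final Comparator<SyncEntry> BEFORE_COMPLETION_ORDER =
            (a, b) -> Boolean.compare(a.interposed, b.interposed);

    /** Order in which beforeCompletion would be invoked. */
    static List<String> beforeCompletionOrder(List<SyncEntry> registered) {
        return sortedNames(registered, BEFORE_COMPLETION_ORDER);
    }

    /** Order in which afterCompletion would be invoked: interposed entries first. */
    static List<String> afterCompletionOrder(List<SyncEntry> registered) {
        return sortedNames(registered, BEFORE_COMPLETION_ORDER.reversed());
    }

    private static List<String> sortedNames(List<SyncEntry> registered, Comparator<SyncEntry> order) {
        List<SyncEntry> sorted = new ArrayList<>(registered);
        sorted.sort(order); // List.sort is stable, so registration order survives within a group
        List<String> names = new ArrayList<>();
        for (SyncEntry e : sorted) names.add(e.name);
        return names;
    }
}
```

Because there is only one list and one pass, nothing in this structure marks where the regular syncs end and the interposed ones begin, which is why exposing the two groups as separate phases takes more work.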

So, the options are:

1. Wrap the local subordinate tx object supplied by TS with an object that intercepts the registerSynchronization calls and handles them itself, e.g. by proxying them individually or as a group to the parent coordinator. Work needed on the TS side: none, i.e. you won't be held up waiting for us. IIRC various AS components already do something like this for a local JVM case where they need ordering guarantees between their own Syncs that exceed what can be done with JTA semantics.

2. Register Syncs with the local subordinate and register a single Sync in the parent, using the impl thereof to pass the beforeCompletion call down to the subordinate coordinator. Work needed on the TS side: none for beforeCompletion as it's already exposed. Some to expose afterCompletion and allow some way for commit|rollback to indicate afterCompletion processing should be deferred until an explicit call for it is made, as they currently bundle it in automatically and must continue to do so in the default JCA case.

3. Allow JTA 1.1 'interposition' ordering guarantees to span multiple nodes:

a. Do it using the first method above, except you either register two Sync proxies (one regular, one 'interposed') in the parent, or you register every child Sync in the remote parent using its own individual proxy, using a type appropriate wrapper to preserve ordering. Work needed on the TS side: none.

b. Do it using method two above. Work needed on the TS side: lots, as the TS core would need to be refactored to support handling multiple sync phases separately before appropriate methods could be exposed for you to call. Don't hold your breath.

The joker in the pack is of course the failure semantics. In JTA all Synchronizations are volatile (another overloaded term. 'in-memory only' rather than 'memory fence aware' in this context). They are not logged to disk and hence afterCompletion calls won't run in the event of a crash. Where everything is in one JVM that is relatively easy to reason about. Where a crash may affect only one of the JVMs in an interposed hierarchy, then you need to start worrying about detecting and cleaning up orphaned afterCompletions that may never get called. A typical afterCompletion call is 'release connection back to pool', so leaking them is going to suck big time.

-

7. Re: Remote Txn Inflow: Synchronizations

dmlloyd Sep 29, 2011 1:37 PM (in response to jhalliday)

Jonathan Halliday wrote:

David

We need to be really careful discussing interposed syncs, because the term is overloaded and hence ambiguous.

Noted; I'll be sure to be specific.

Jonathan Halliday wrote:

In the context of JTA 1.1, TransactionSynchronizationRegistry.registerInterposedSynchronization has specific partial ordering guarantees. However, as the JTA does not explicitly cover distributed tx semantics, those ordering guarantees are relative to the other Synchronizations in the same JVM only. We may or may not wish to offer wider guarantees or config options according to how useful they are and how easy they are to implement.

I am content with moving forward with this interpretation (ordering within same JVM only). If it proves to be too limited, we can change the behavior in a future version of the protocol.

Jonathan Halliday wrote:

The term 'interposition' also has meaning in distributed tx: a subordinate placed between the root coordinator and eventual child resource or Synchronization to form a tree structure. There are several ways of implementing that, including: Synchronization objects may only be registered with their local node and are driven as part of that local node's beforeCompletion phase. That phase may or may not itself be interposed to the corresponding phase in the root coordinator. Alternatively, the local Synchronization may be wrapped in a remote transport aware proxy and registered with the root (or an intermediate parent if the tree is multi level) coordinator. In that case the beforeCompletion phase in the child node is a no-op, the local Synchronization list being empty. Naturally all three solutions display subtly different semantics and not so subtly different performance characteristics.

The final piece of background you need before we continue on to implementation choices is this: the transaction engine underpinning the JTA is not directly aware of the distinction between JTA 'interposed' and regular Synchronizations. It does not maintain separate lists for them, nor process them in separate phases. The necessary ordering guarantees are achieved by sorting a single list of heterogeneous Synchronization elements using, amongst other things, their 'interposed' attribute. This impacts how easy it is to expose separate lifecycle phases so the subordinate tx's lifecycle can be driven in a more fine grained manner. Specifically, it's easy to expose beforeCompletion and have it run all the Syncs, but considerably harder to expose separate methods for running regular and JTA-interposed syncs.

So, the options are:

1. Wrap the local subordinate tx object supplied by TS with an object that intercepts the registerSynchronization calls and handles them itself, e.g. by proxying them individually or as a group to the parent coordinator. Work needed on the TS side: none, i.e. you won't be held up waiting for us. IIRC various AS components already do something like this for a local JVM case where they need ordering guarantees between their own Syncs that exceed what can be done with JTA semantics.

2. Register Syncs with the local subordinate and register a single Sync in the parent, using the impl thereof to pass the beforeCompletion call down to the subordinate coordinator. Work needed on the TS side: none for beforeCompletion as it's already exposed. Some to expose afterCompletion and allow some way for commit|rollback to indicate afterCompletion processing should be deferred until an explicit call for it is made, as they currently bundle it in automatically and must continue to do so in the default JCA case.

3. Allow JTA 1.1 'interposition' ordering guarantees to span multiple nodes:

a. Do it using the first method above, except you either register two Sync proxies (one regular, one 'interposed') in the parent, or you register every child Sync in the remote parent using its own individual proxy, using a type appropriate wrapper to preserve ordering. Work needed on the TS side: none.

b. Do it using method two above. Work needed on the TS side: lots, as the TS core would need to be refactored to support handling multiple sync phases separately before appropriate methods could be exposed for you to call. Don't hold your breath.

I like solution #2 for now (single sync in the parent), for speed and simplicity (it is more or less what I have already planned on doing); adding functionality for propagating calls up the chain can add a lot of complexity (thus risk given the short timeframe) for us. If later on we discover we need two phases, then moving to #1 later on should not be a problem, though it could add significant latency.

The only thing I'd be concerned about is the extra work that you'd have to do for exposing this hook, but it shouldn't block us as long as it appears sometime before the EAP final release (we can just mark the source with comments at the appropriate location; we should be able to test most functionality without it).

Jonathan Halliday wrote:

The joker in the pack is of course the failure semantics. In JTA all Synchronizations are volatile (another overloaded term. 'in-memory only' rather than 'memory fence aware' in this context). They are not logged to disk and hence afterCompletion calls won't run in the event of a crash. Where everything is in one JVM that is relatively easy to reason about. Where a crash may affect only one of the JVMs in an interposed hierarchy, then you need to start worrying about detecting and cleaning up orphaned afterCompletions that may never get called. A typical afterCompletion call is 'release connection back to pool', so leaking them is going to suck big time.

For the purposes of our protocol (from the client angle), the "resource" we expose would always be associated with a Synchronization, so when we start recovery processing on the crashed-and-restored node, whatever mechanism we use to reconstitute the XAResource(s) could also be used to restore the Synchronizations. However, that mechanism is not yet clear to me; are XAResources or their producers somehow serialized by the TM or is there some other API that I haven't run across yet?

-

8. Re: Remote Txn Inflow: Synchronizations

tomjenkinson Sep 29, 2011 2:10 PM (in response to dmlloyd)

David Lloyd wrote:

Jonathan Halliday wrote:

Register Syncs with the local subordinate and register a single Sync in the parent, using the impl thereof to pass the beforeCompletion call down to the subordinate coordinator. Work needed on the TS side: none for beforeCompletion as it's already exposed. Some to expose afterCompletion and allow some way for commit|rollback to indicate afterCompletion processing should be deferred until an explicit call for it is made, as they currently bundle it in automatically and must continue to do so in the default JCA case.

I like solution #2 for now (single sync in the parent), for speed and simplicity (it is more or less what I have already planned on doing); adding functionality for propagating calls up the chain can add a lot of complexity (thus risk given the short timeframe) for us. If later on we discover we need two phases, then moving to #1 later on should not be a problem, though it could add significant latency.

The only thing I'd be concerned about is the extra work that you'd have to do for exposing this hook, but it shouldn't block us as long as it appears sometime before the EAP final release (we can just mark the source with comments at the appropriate location; we should be able to test most functionality without it).

This is what I was attempting to describe above. That said, the limitation with this approach is clear: in the case where there are no synchronizations registered on the subordinate TM, we will still have to call each subordinate TM with a beforeCompletion message, as the root TM will not be aware of which subordinates do or don't have syncs registered. That is a performance penalty.

-

9. Re: Remote Txn Inflow: Synchronizations

dmlloyd Sep 29, 2011 3:58 PM (in response to tomjenkinson)

Tom Jenkinson wrote:

David Lloyd wrote:

Jonathan Halliday wrote:

Register Syncs with the local subordinate and register a single Sync in the parent, using the impl thereof to pass the beforeCompletion call down to the subordinate coordinator. Work needed on the TS side: none for beforeCompletion as it's already exposed. Some to expose afterCompletion and allow some way for commit|rollback to indicate afterCompletion processing should be deferred until an explicit call for it is made, as they currently bundle it in automatically and must continue to do so in the default JCA case.

I like solution #2 for now (single sync in the parent), for speed and simplicity (it is more or less what I have already planned on doing); adding functionality for propagating calls up the chain can add a lot of complexity (thus risk given the short timeframe) for us. If later on we discover we need two phases, then moving to #1 later on should not be a problem, though it could add significant latency.

The only thing I'd be concerned about is the extra work that you'd have to do for exposing this hook, but it shouldn't block us as long as it appears sometime before the EAP final release (we can just mark the source with comments at the appropriate location; we should be able to test most functionality without it).

This is what I was attempting to describe above. That said, the limitation with this approach is clear: in the case where there are no synchronizations registered on the subordinate TM, we will still have to call each subordinate TM with a beforeCompletion message, as the root TM will not be aware of which subordinates do or don't have syncs registered. That is a performance penalty.

Yeah, I'm okay with that. Later on perhaps we can find a way to optimize that away.

-

10. Re: Remote Txn Inflow: Synchronizations

dmlloyd Sep 30, 2011 1:59 AM (in response to dmlloyd)

This is gonna bug me until I fix it...

David Lloyd wrote:

[...]

You could however use integer packing to make more room; in such a scheme, the bqual bits which identify the node would still be an integer sequence but would work like this:

- Values 0000-0111 (0-7): resources 0-7, length is 4 bits

- Values 1000 0000-1011 1111 (0x80-0xBF): resources 8-71, length is 8 bits

- Values 1100 0000 0000-1101 1111 1111 (0xC00-0xDFF): resources 72-583, length is 12 bits

- etc.

For this to work you'd have to treat 0000 as a special padding sequence for the case where only one nybble of the last byte is used. Thus giving:

- 0000: padding

- 0001-0111: resources 0-6

- 1000 0000-1011 1111: resources 7-70

- 1100 0000 0000-1101 1111 1111: resources 71-582

- etc.
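The revised scheme, with `0000` reserved as padding, can be sketched in Java as a pack/unpack pair over whole bytes. This is a hedged illustration, not a proposed wire format: `PaddedBqualPath` and its methods are hypothetical, the path is simply a sequence of per-level resource indices, and malformed input is not validated.

```java
import java.util.ArrayList;
import java.util.List;

final class PaddedBqualPath {
    /** First index that needs k nybbles in the revised scheme: 0, 7, 71, 583, ... */
    static long base(int k) {
        long b = 0;
        for (int j = 1; j < k; j++) b += (1L << (3 * j)) - (j == 1 ? 1 : 0);
        return b;
    }

    private static void appendNybbles(int r, List<Integer> out) {
        if (r < 0) throw new IllegalArgumentException("negative index");
        int k = 1;
        while (r >= base(k + 1)) k++;
        long payload = r - base(k) + (k == 1 ? 1 : 0); // 0000 is reserved for padding
        long code = (((1L << (k - 1)) - 1) << (3 * k + 1)) | payload;
        for (int i = k - 1; i >= 0; i--) out.add((int) ((code >> (4 * i)) & 0xF));
    }

    /** Packs one index per tree level into bytes, padding the last byte if needed. */
    static byte[] pack(int... path) {
        List<Integer> nybbles = new ArrayList<>();
        for (int r : path) appendNybbles(r, nybbles);
        if (nybbles.size() % 2 != 0) nybbles.add(0); // padding nybble
        byte[] out = new byte[nybbles.size() / 2];
        for (int i = 0; i < out.length; i++) {
            out[i] = (byte) ((nybbles.get(2 * i) << 4) | nybbles.get(2 * i + 1));
        }
        return out;
    }

    /** Reads indices until the bytes run out or a padding nybble starts a value. */
    static List<Integer> unpack(byte[] packed) {
        List<Integer> path = new ArrayList<>();
        int total = packed.length * 2;
        int pos = 0;
        while (pos < total && nybbleAt(packed, pos) != 0) {
            int ones = 0;
            scan:
            for (int i = pos; ; i++) { // count leading one bits, possibly across nybbles
                int n = nybbleAt(packed, i);
                for (int bit = 3; bit >= 0; bit--) {
                    if (((n >> bit) & 1) == 0) break scan;
                    ones++;
                }
            }
            int k = ones + 1;
            long code = 0;
            for (int i = 0; i < k; i++) code = (code << 4) | nybbleAt(packed, pos + i);
            long payload = code & ((1L << (3 * k)) - 1);
            path.add((int) (base(k) + payload - (k == 1 ? 1 : 0)));
            pos += k;
        }
        return path;
    }

    private static int nybbleAt(byte[] b, int i) {
        int v = b[i / 2] & 0xFF;
        return (i % 2 == 0) ? v >> 4 : v & 0xF;
    }
}
```

A zero nybble is only treated as padding where a new value would start; inside a multi-nybble value (for example the second nybble of `1000 0000`) it is ordinary payload.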

-

11. Re: Remote Txn Inflow: Synchronizations

jhalliday Sep 30, 2011 5:46 AM (in response to dmlloyd)

> when we start recovery processing on the crashed-and-restored node, whatever mechanism we use to reconstitute the XAResource(s) could also be used to restore the Synchronizations.

Won't work I'm afraid. The XAResources are disposed of at the end of commit processing. afterCompletions run after that, hence the name. So, no XAResource to hook in to at recovery time. Given that the remote comms protocol is one way, you need a top down discovery mechanism to identify orphaned afterCompletions in a still running subordinate during recovery of a previously crashed parent, or some timeout like mechanism in the child. It's basically a simpler analog of the problem that XAResource.recover is designed to solve for resources.
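One possible shape for the "timeout like mechanism in the child" is sketched below in Java. Everything here is an assumption made for illustration: the class name, the string transaction ids, the explicit clock parameter (used so the behaviour is deterministic), and the choice to run expired callbacks with the value of `Status.STATUS_UNKNOWN`.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical child-side tracker for afterCompletion calls the parent has not yet driven. */
final class OrphanedCompletionSweeper {
    /** Same numeric value as javax.transaction.Status.STATUS_UNKNOWN. */
    static final int STATUS_UNKNOWN = 5;

    /** Stand-in for the afterCompletion half of a JTA Synchronization. */
    interface AfterCompletion {
        void afterCompletion(int status);
    }

    private static final class Pending {
        final AfterCompletion callback;
        final long deadlineMillis;

        Pending(AfterCompletion callback, long deadlineMillis) {
            this.callback = callback;
            this.deadlineMillis = deadlineMillis;
        }
    }

    private final Map<String, Pending> pending = new LinkedHashMap<>();
    private final long timeoutMillis;

    OrphanedCompletionSweeper(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    /** Called when the subordinate starts waiting for the parent's afterCompletion call. */
    synchronized void track(String txId, AfterCompletion callback, long nowMillis) {
        pending.put(txId, new Pending(callback, nowMillis + timeoutMillis));
    }

    /** Normal path: the parent drives afterCompletion. Returns false if already swept. */
    synchronized boolean complete(String txId, int status) {
        Pending p = pending.remove(txId);
        if (p == null) return false;
        p.callback.afterCompletion(status);
        return true;
    }

    /** Fallback path: run expired callbacks so that resources such as connections are released. */
    synchronized List<String> sweep(long nowMillis) {
        List<String> swept = new ArrayList<>();
        Iterator<Map.Entry<String, Pending>> it = pending.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Pending> e = it.next();
            Pending p = e.getValue();
            if (p.deadlineMillis <= nowMillis) {
                swept.add(e.getKey());
                it.remove();
                p.callback.afterCompletion(STATUS_UNKNOWN);
            }
        }
        return swept;
    }
}
```

A timeout alone cannot tell the child what the real outcome was, so callbacks that care about commit versus rollback would still need the top-down discovery described above.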

-

12. Re: Remote Txn Inflow: Synchronizations

tomjenkinson Sep 30, 2011 5:47 AM (in response to dmlloyd)

Hi David,

If you are happy to wait for JTA interposed Synchronization behavior, but do eventually want the same, may I ask you to raise a Jira for it? You can assign it to me if you like and mark it as a dependency of: https://issues.jboss.org/browse/JBTM-895. If you want, you can mark it as a fix for 4.15.x

Tom

-

13. Re: Remote Txn Inflow: Synchronizations

tomjenkinson Sep 30, 2011 7:04 AM (in response to dmlloyd)

David Lloyd wrote:

Tom Jenkinson wrote:

Hi David,

It looks like we would need at least two additional functions to XATerminator, in the diagram referred to as "XA Terminator enhancement":

a. A before completion method

b. An after completion method

Just a quick note here that we can use other classes than XATerminator; there is no need for this particular code (i.e. the EJB server transaction inflow code) to be loosely coupled from the transaction manager, so we should implement the most direct and most correct solution, which probably means just bypassing XATerminator and using the APIs consumed by it. In fact we are already planning on doing so because EJB invocation isn't done with "Work" which is presently the way that tasks are executed via JBossXATerminator instances.

Sure, of course.

David Lloyd wrote:

Yeah that should be fine, just let me know what the method hooks will be. I'm also fine with using SubordinationManager directly, or providing an alternative extended version of com.arjuna.ats.internal.jta.transaction.arjunacore.jca.XATerminatorImple, or whatever is easiest for you guys. In particular, at a protocol level it does us no good to have all the exceptions mapped to XAException because we'll just have to decode it again and send it over the wire; it just costs an extra, useless transaction wrapping.

I don't think we should expose SubordinationManager directly as we don't necessarily want to make that a public API. As such we should probably expose a new API specific to the job; I have posted this on the other thread so people watching that thread only will get the update.

-

14. Re: Remote Txn Inflow: Synchronizations

marklittle Sep 30, 2011 9:00 AM (in response to tomjenkinson)

Or you do it lazily when the first synchronization is added locally. That's what we do in the JTS. Check it out.

Tom Jenkinson wrote:

This is what I was attempting to describe above. That said, the limitation with this approach is clear: in the case where there are no synchronizations registered on the subordinate TM, we will still have to call each subordinate TM with a beforeCompletion message, as the root TM will not be aware of which subordinates do or don't have syncs registered. That is a performance penalty.
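The lazy alternative can be sketched in Java as follows. This is not the JTS implementation: `LocalSync` is a stand-in for `javax.transaction.Synchronization`, and `ParentLink` and `LazySubordinate` are hypothetical. How the registration request actually reaches the parent (for example piggybacked on an invocation reply) is left open.

```java
import java.util.ArrayList;
import java.util.List;

/** Stand-in for javax.transaction.Synchronization. */
interface LocalSync {
    void beforeCompletion();
    void afterCompletion(int status);
}

/** Hypothetical channel used to ask the parent coordinator for a proxy synchronization. */
interface ParentLink {
    void registerProxyFor(LazySubordinate subordinate);
}

final class LazySubordinate {
    private final ParentLink parent;
    private final List<LocalSync> syncs = new ArrayList<>();
    private boolean proxyRegistered;

    LazySubordinate(ParentLink parent) {
        this.parent = parent;
    }

    synchronized void registerSynchronization(LocalSync sync) {
        syncs.add(sync);
        if (!proxyRegistered) { // first local sync: enlist with the parent now
            proxyRegistered = true;
            parent.registerProxyFor(this);
        }
    }

    /** Only reached through the parent's proxy, so never called when no syncs exist. */
    synchronized void doBeforeCompletion() {
        for (LocalSync s : new ArrayList<>(syncs)) s.beforeCompletion();
    }
}

final class LazyRegistrationDemo {
    /** Returns how many proxy registrations the parent saw for the given number of local syncs. */
    static int proxiesRegistered(int localSyncCount) {
        int[] registrations = {0};
        LazySubordinate sub = new LazySubordinate(s -> registrations[0]++);
        for (int i = 0; i < localSyncCount; i++) {
            sub.registerSynchronization(new LocalSync() {
                public void beforeCompletion() { }
                public void afterCompletion(int status) { }
            });
        }
        return registrations[0];
    }
}
```

A subordinate with no local synchronizations never registers a proxy, so the root makes no beforeCompletion call to it; the trade-off is one extra registration message the first time a sync is added.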