Auditing large collections with envers - out of memory error (heap)

qmnonic Aug 24, 2012 1:00 PM

My scenario:

I'm importing medium-sized datasets of approximately 150,000 rows (events). Each row is fairly small (a few kilobytes of data).

The Hibernate relationship between Dataset and Event is a ManyToMany.

{code}@Audited
public class Dataset implements Comparable, Serializable {

    ... non relevant properties excluded ...

    // Each dataset represents a collection of events.
    @ManyToMany(mappedBy = "datasets", fetch = FetchType.LAZY)
    @OrderBy("dateStartMillis asc")
    private Set<Event> events = new HashSet<Event>();
}{code}

{code}@Audited
public class Event implements Comparable, Serializable {

    ... non relevant properties excluded ...

    @ManyToMany(fetch = FetchType.EAGER, cascade = { javax.persistence.CascadeType.MERGE })
    @JoinTable(name = "dataset_events",
        inverseJoinColumns = @JoinColumn(name = "dataset_id", referencedColumnName = "id"),
        joinColumns = @JoinColumn(name = "event_id", referencedColumnName = "id")
    )
    private Set<Dataset> datasets = new HashSet<Dataset>();
}{code}

I've set up the relationship so that Event owns it (Dataset is the inverse side, via mappedBy), so the relationship itself shouldn't occupy much memory.

I'm batching/flushing as per hibernate documentation.
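For readers unfamiliar with it, the batching pattern referred to here can be sketched roughly as follows. This is a minimal illustration, not the poster's actual import code: `BatchSession` is a hypothetical stand-in for the `save`, `flush` and `clear` calls on `org.hibernate.Session` so the example runs without Hibernate, and `RecordingSession`, `BatchImporter` and the batch size are likewise assumptions made for the example.

```java
import java.util.List;

/** Hypothetical stand-in for the three org.hibernate.Session calls the pattern uses. */
interface BatchSession {
    void save(Object entity);
    void flush(); // push pending inserts to the database
    void clear(); // drop all entities held by the session
}

final class BatchImporter {
    private BatchImporter() {}

    /**
     * Saves every row, flushing and clearing the session after each full batch
     * and once more for a trailing partial batch.
     * Returns the number of flush/clear cycles performed.
     */
    static int importAll(BatchSession session, List<?> rows, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        int cycles = 0;
        int count = 0;
        for (Object row : rows) {
            session.save(row);
            count++;
            if (count % batchSize == 0) {
                session.flush();
                session.clear();
                cycles++;
            }
        }
        if (count % batchSize != 0) { // rows left over after the last full batch
            session.flush();
            session.clear();
            cycles++;
        }
        return cycles;
    }
}

/** In-memory session that only counts, used to observe the pattern's effect. */
final class RecordingSession implements BatchSession {
    int held = 0;    // entities currently held by the session
    int maxHeld = 0; // high-water mark of held entities
    int flushed = 0; // entities written out so far
    private int pending = 0;

    @Override public void save(Object entity) {
        held++;
        pending++;
        maxHeld = Math.max(maxHeld, held);
    }

    @Override public void flush() {
        flushed += pending;
        pending = 0;
    }

    @Override public void clear() {
        held = 0;
    }
}
```

With a real Hibernate `Session` in place of `BatchSession`, the loop is meant to keep the number of entities held by the session bounded by the batch size, however many rows are imported.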

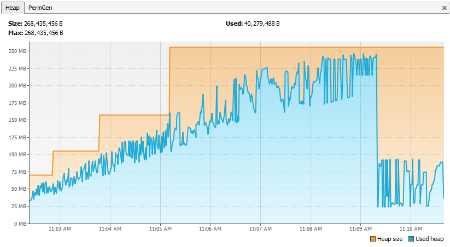

With Envers auditing on, the heap grows until it runs out of memory. The example below shows 55,000 rows being inserted into a fresh database.

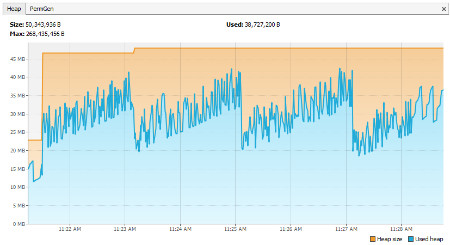

With Envers auditing off, using the same dataset (55k rows) and a fresh database, the heap stays fairly flat.

Originally, when Dataset owned the relationship, I experienced the same heap growth/explosion problem.

My Envers configuration is as follows:

{code}<property name="org.hibernate.envers.audit_table_suffix" value="_history" />
<property name="org.hibernate.envers.revision_field_name" value="revision" />
<property name="org.hibernate.envers.revision_type_field_name" value="revision_action" />
<property name="org.hibernate.envers.revision_on_collection_change" value="false" /> <!-- helps resolve memory issues when working with large datasets? -->
<property name="org.hibernate.envers.use_revision_entity_with_native_id" value="false" />
<property name="org.hibernate.envers.audit_strategy" value="org.hibernate.envers.strategy.DefaultAuditStrategy"/>{code}

I tried both Hibernate/Envers 3.6.9 and 4.1.6. Both behave the same way.

Any advice as to how to correct/deal with the issue? I want to get this thing working... I'm out of ideas.

FYI - Increasing the heap size isn't really an option, as I expect larger and larger datasets.

Matt.