Best way to configure ModeShape & Hibernate Search so that indexes are stored in Infinispan

hchiorean Apr 2, 2013 12:46 PM

As you may know, ModeShape has for some time offered the option of storing its indexes in Infinispan, via Hibernate Search's Infinispan integration. However, we've been seeing an increasing number of issues with this feature, in both clustered and non-clustered environments.

The purpose of this thread is to accumulate the knowledge gained so far on this topic and hopefully figure out a way to properly & reliably configure the Infinispan caches, so that indexing works in various setups.

ModeShape - Hibernate Search integration

ModeShape uses Hibernate Search in a fairly simple way, which comes down to 2 key areas:

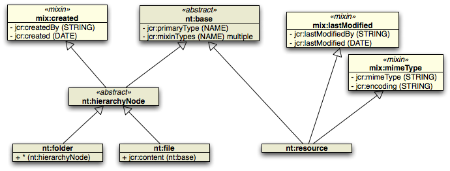

- defining the index name & structure - this is done via the https://github.com/ModeShape/modeshape/blob/master/modeshape-jcr/src/main/java/org/modeshape/jcr/query/lucene/basic/NodeInfo.java class

- submitting indexing tasks (via Hibernate Search units of work): https://github.com/ModeShape/modeshape/blob/master/modeshape-jcr/src/main/java/org/modeshape/jcr/query/lucene/basic/BasicLuceneSchema.java#L387

And that's pretty much it; the rest depends on how Hibernate Search is configured.

However, one very important aspect of the way indexes are defined is that for each and every repository node that gets indexed, ModeShape will always use the same index (same index name).

Hibernate Search - Infinispan local cache configuration

It seems that even when running a single local node, indexing can behave quite differently depending on how the index caches are configured. There are 3 caches used for indexing: "index-data", "index-locks" and "index-metadata". What we've seen so far is that different combinations of transactional and non-transactional settings across those caches can result in different issues (ranging from lock timeouts to index data corruption).

More information on this topic can be found here: https://issues.jboss.org/browse/MODE-1876
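To make the three caches concrete, here is a minimal sketch of how the cache names above could be mapped onto Hibernate Search's Infinispan directory provider. It assumes Hibernate Search 4.x property names and that the properties are handed to Hibernate Search directly; ModeShape may set the equivalent values through its own repository configuration instead. The class and method names are hypothetical, and the sketch says nothing about which transactional settings are correct, since that is exactly the open question.

```java
import java.util.Properties;

// Hypothetical helper: collects the Hibernate Search settings that point the
// default index at the three Infinispan caches named in this thread.
final class IndexCacheSettings {

    public static Properties infinispanIndexProperties() {
        Properties p = new Properties();
        // Store the Lucene index in Infinispan instead of on the file system.
        p.setProperty("hibernate.search.default.directory_provider", "infinispan");
        // Cache holding the index segments (chunked).
        p.setProperty("hibernate.search.default.data_cachename", "index-data");
        // Cache holding the index write lock.
        p.setProperty("hibernate.search.default.locking_cachename", "index-locks");
        // Cache holding file listings and other index metadata.
        p.setProperty("hibernate.search.default.metadata_cachename", "index-metadata");
        return p;
    }

    public static void main(String[] args) {
        Properties p = infinispanIndexProperties();
        for (String name : p.stringPropertyNames()) {
            System.out.println(name + " = " + p.getProperty(name));
        }
    }
}
```

The transactional mode of each cache is set on the Infinispan side (in the cache configuration those names refer to), not in these properties.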

Hibernate Search - Infinispan clustered setup using multiple index writers

Problems with this setup are described in more detail in issues such as https://issues.jboss.org/browse/MODE-1843 or https://issues.jboss.org/browse/MODE-1845.

Based on those, and on the fact that multiple index writers working on the same index will always contend for the same index write lock (held as a cache entry in Infinispan), it seems that the only possible way to cluster multiple index-writing nodes is to use a JMS master-slave configuration, where in effect only the master node updates the index. However, this may still exhibit the issues of a local, non-clustered node if the caches are not configured properly.
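As a rough illustration of the master-slave idea, the sketch below builds the Hibernate Search worker settings for each role. It assumes Hibernate Search 4.x property names; the JNDI names for the connection factory and queue are placeholders, and the class and method names are hypothetical. The master additionally needs a JMS consumer that applies the queued index work, which is not shown here.

```java
import java.util.Properties;

// Hypothetical helper: slaves forward index work to a JMS queue, while the
// master applies index changes itself using the local Lucene backend.
final class JmsIndexingSettings {

    public static Properties forNode(boolean master) {
        Properties p = new Properties();
        // Both roles read the same index, stored in Infinispan.
        p.setProperty("hibernate.search.default.directory_provider", "infinispan");
        if (master) {
            // Only the master writes, so only it takes the index write lock.
            p.setProperty("hibernate.search.default.worker.backend", "lucene");
        } else {
            // Slaves never open an index writer; they enqueue the work.
            p.setProperty("hibernate.search.default.worker.backend", "jms");
            // Placeholder JNDI names; use whatever the JMS provider exposes.
            p.setProperty("hibernate.search.default.worker.jms.connection_factory",
                    "/ConnectionFactory");
            p.setProperty("hibernate.search.default.worker.jms.queue",
                    "queue/hibernatesearch");
        }
        return p;
    }

    public static void main(String[] args) {
        System.out.println("master: " + forNode(true));
        System.out.println("slave:  " + forNode(false));
    }
}
```

With this split, contention on the write lock between nodes should disappear, but the master is still effectively a single local writer, so the cache configuration concerns from the local setup still apply to it.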