Memory problems in paging mode makes server unresponsive

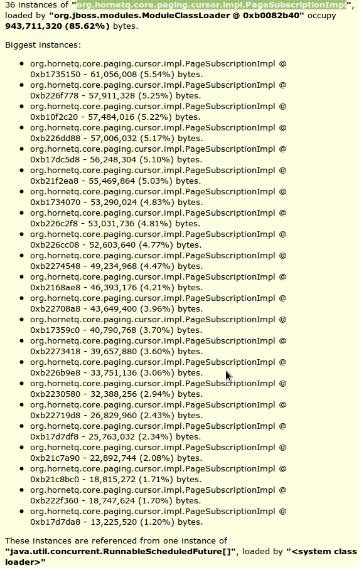

mlange Feb 12, 2014 2:50 PM

We are currently testing paging mode (HornetQ 2.2.23, part of EAP 6.0.1). In our use cases, consumers can become temporarily unavailable. For high-throughput queues, this can leave millions of messages paged to disk. I have not managed to configure the system so that it stays stable in this scenario.

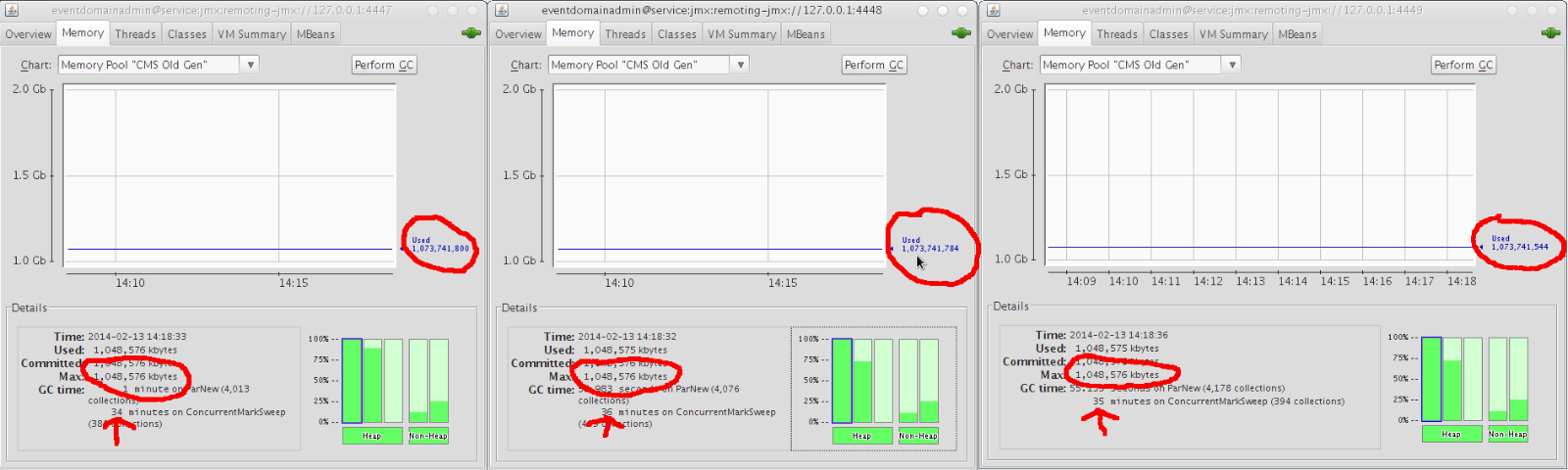

We have a three-node clustered setup, with clients load-balanced across all nodes using plain JMS. NIO is used for both the journal and Netty. Each server has a maximum heap of 1.5 GB. The nodes are configured to start paging once an address holds 500 MB of messages in memory:

<clustered>true</clustered>
<persistence-enabled>true</persistence-enabled>
<jmx-management-enabled>true</jmx-management-enabled>
<persist-id-cache>false</persist-id-cache>
<failover-on-shutdown>true</failover-on-shutdown>
<shared-store>true</shared-store>
<journal-type>NIO</journal-type>
<journal-buffer-timeout>16666666</journal-buffer-timeout>
<journal-buffer-size>5242880</journal-buffer-size>
<journal-file-size>10485760</journal-file-size>
<journal-min-files>10</journal-min-files>

<netty-acceptor name="netty" socket-binding="messaging">
    <param key="use-nio" value="true"/>
    <param key="batch-delay" value="50"/>
    <param key="direct-deliver" value="false"/>
</netty-acceptor>

<!-- 500MB memory limit per address -->
<max-size-bytes>524288000</max-size-bytes>
<page-size-bytes>52428800</page-size-bytes>
<address-full-policy>PAGE</address-full-policy>
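To put the byte values above in perspective, here is a small arithmetic sketch in Java. It only converts numbers taken from the configuration; it assumes that "1.5 GB" means a maximum heap of 1536 MiB (`-Xmx1536m`), and the class and method names are hypothetical, chosen for illustration.

```java
import java.util.Locale;

/**
 * Hypothetical helper: expresses the paging values from the posted
 * configuration in MiB and relates them to an assumed 1536 MiB heap.
 */
public class PagingBudget {
    static final long MIB = 1024L * 1024L;

    /** Whole MiB contained in the given number of bytes. */
    static long toMiB(long bytes) {
        return bytes / MIB;
    }

    /** How many page files of pageSizeBytes fit into the maxSizeBytes budget. */
    static long pagesPerMemoryBudget(long maxSizeBytes, long pageSizeBytes) {
        return maxSizeBytes / pageSizeBytes;
    }

    /** Fraction of the heap taken by one address sitting at its size limit. */
    static double heapFraction(long addressBytes, long heapBytes) {
        return (double) addressBytes / heapBytes;
    }

    public static void main(String[] args) {
        long maxSizeBytes = 524_288_000L;  // <max-size-bytes>
        long pageSizeBytes = 52_428_800L;  // <page-size-bytes>
        long heapBytes = 1536L * MIB;      // assumption: "1.5 GB" means -Xmx1536m

        System.out.println("max-size-bytes:  " + toMiB(maxSizeBytes) + " MiB");
        System.out.println("page-size-bytes: " + toMiB(pageSizeBytes) + " MiB");
        System.out.println("page files per max-size-bytes budget: "
                + pagesPerMemoryBudget(maxSizeBytes, pageSizeBytes));
        // Locale.ROOT keeps the decimal separator a dot regardless of JVM locale.
        System.out.println(String.format(Locale.ROOT,
                "one full address = %.1f%% of the heap",
                100.0 * heapFraction(maxSizeBytes, heapBytes)));
    }
}
```

Under that assumption, the single address used in the test can hold roughly a third of each node's heap in memory before paging kicks in.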

The test uses only one address, with 30 queues bound to it.

Producing and consuming with many clients at the same time works fine. Producing messages without consuming them, however, causes serious problems: the old generation fills up so quickly that the server does nothing but garbage collection. The server logs show these timeouts in QueueImpl:

20:27:37,216 WARN [org.hornetq.core.server.impl.QueueImpl] (New I/O server worker #2-22) Timed out on waiting for MessageCount: java.lang.IllegalStateException: Timed out on waiting for MessageCount

The clients get timeouts:

javax.jms.JMSException: Timed out waiting for response when sending packet 43
    at org.hornetq.core.protocol.core.impl.ChannelImpl.sendBlocking(ChannelImpl.java:302)

The problems seem to start once messages are paged to disk (the paging directory is about 300 MB at that point). From then on, it is almost impossible to start a new consumer on the queues. Producing works only partially and is very slow compared with the rates achieved when messages are consumed in parallel. The consumers that are still connected also receive messages considerably more slowly.

A thread dump shows several threads like these:

"New I/O server worker #2-1" prio=10 tid=0x000000000144a800 nid=0x1dea waiting on condition [0x00007fb68de08000]
   java.lang.Thread.State: WAITING (parking)
    at sun.misc.Unsafe.park(Native Method)
    - parking to wait for <0x00000000b0ab59f8> (a java.util.concurrent.Semaphore$NonfairSync)
    at java.util.concurrent.locks.LockSupport.park(LockSupport.java:186)
    at java.util.concurrent.locks.AbstractQueuedSynchronizer.parkAndCheckInterrupt(AbstractQueuedSynchronizer.java:834)
    at java.util.concurrent.locks.AbstractQueuedSynchronizer.doAcquireSharedInterruptibly(AbstractQueuedSynchronizer.java:994)
    at java.util.concurrent.locks.AbstractQueuedSynchronizer.acquireSharedInterruptibly(AbstractQueuedSynchronizer.java:1303)
    at java.util.concurrent.Semaphore.acquire(Semaphore.java:317)
    at org.hornetq.core.persistence.impl.journal.JournalStorageManager.beforePageRead(JournalStorageManager.java:1689)

"New I/O server worker #2-13" prio=10 tid=0x0000000001f30800 nid=0x1e3e runnable [0x00007fb69972b000]
   java.lang.Thread.State: RUNNABLE
    at sun.misc.Unsafe.setMemory(Native Method)
    at sun.misc.Unsafe.setMemory(Unsafe.java:529)
    at java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:132)
    at java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:306)
    at org.hornetq.core.journal.impl.NIOSequentialFileFactory.allocateDirectBuffer(NIOSequentialFileFactory.java:108)
    at org.hornetq.core.persistence.impl.journal.JournalStorageManager.allocateDirectBuffer(JournalStorageManager.java:1709)
    at org.hornetq.core.paging.impl.PageImpl.read(PageImpl.java:119)
    at org.hornetq.core.paging.cursor.impl.PageCursorProviderImpl.getPageCache(PageCursorProviderImpl.java:190)
    at org.hornetq.core.paging.cursor.impl.PageCursorProviderImpl.getMessage(PageCursorProviderImpl.java:126)
    at org.hornetq.core.paging.cursor.impl.PageSubscriptionImpl.queryMessage(PageSubscriptionImpl.java:607)
    at org.hornetq.core.paging.cursor.PagedReferenceImpl.getPagedMessage(PagedReferenceImpl.java:73)
    - locked <0x00000000d588d2d0> (a org.hornetq.core.paging.cursor.PagedReferenceImpl)
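The two threads above show a pattern that can be modelled in a few lines: one worker is parked on a `Semaphore` inside `JournalStorageManager.beforePageRead`, while another has passed that gate and is inside `ByteBuffer.allocateDirect`, where `DirectByteBuffer` zeroes the new memory (the `Unsafe.setMemory` frame). The sketch below is a simplified, hypothetical model of that pattern, not HornetQ source; the class name, the permit count and the 1 MiB page size are assumptions chosen for illustration.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Hypothetical model (not HornetQ code) of the pattern in the thread dump:
 * page reads pass through a semaphore, and each admitted read allocates a
 * direct buffer for the page it loads.
 */
public class PageReadModel {
    private final Semaphore readPermits;
    private final AtomicInteger inFlight = new AtomicInteger();
    private final AtomicInteger maxInFlight = new AtomicInteger();

    public PageReadModel(int maxConcurrentReads) {
        this.readPermits = new Semaphore(maxConcurrentReads);
    }

    /** Simulates reading one page; blocks while all permits are in use. */
    public ByteBuffer readPage(int pageSizeBytes) throws InterruptedException {
        readPermits.acquire(); // callers park here, like the WAITING thread above
        try {
            int now = inFlight.incrementAndGet();
            maxInFlight.accumulateAndGet(now, Math::max);
            try {
                // allocateDirect zeroes the whole buffer before returning it,
                // which corresponds to the Unsafe.setMemory frame above.
                return ByteBuffer.allocateDirect(pageSizeBytes);
            } finally {
                inFlight.decrementAndGet();
            }
        } finally {
            readPermits.release(); // admits the next waiting reader
        }
    }

    /** Highest number of reads that were ever inside the gate at once. */
    public int maxObservedConcurrency() {
        return maxInFlight.get();
    }

    public static void main(String[] args) throws Exception {
        PageReadModel model = new PageReadModel(2); // assumed permit count
        ExecutorService pool = Executors.newFixedThreadPool(8);
        for (int i = 0; i < 32; i++) {
            // 1 MiB stands in for a page; the posted config uses 50 MiB pages.
            pool.submit(() -> { model.readPage(1 << 20); return null; });
        }
        pool.shutdown();
        pool.awaitTermination(30, TimeUnit.SECONDS);
        System.out.println("max concurrent page reads: " + model.maxObservedConcurrency());
    }
}
```

In this model, each returned buffer keeps its native memory until the `ByteBuffer` object itself is garbage collected, so the semaphore bounds how many reads run at once, not how much page data stays reachable afterwards.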

Is there anything I can do to keep memory from being exhausted this quickly? Am I missing something important in the configuration for this paging use case?

Thanks!

Marek

Message was edited by: Marek Neumann (added HornetQ version)