Memory exhaustion issue

dalbani Sep 23, 2014 6:19 AM

Hello,

I've recently started using ModeShape to store various documents, following a schema defined in a CND file.

I plan to deploy a (small) cluster of ModeShape instances to get high availability.

That's why I've configured ModeShape to use Infinispan-backed storage.

But I've been struggling with a memory exhaustion problem that is totally incomprehensible to me.

Basically, the JVM uses up all of its heap very quickly and then crashes with an OutOfMemoryError (OOM).

There must be something obviously wrong in my set-up but I just can't see what and where.

Here's an extract of my configuration (in this case with a LevelDB cache store, but I've also tested with BDB JE and single-file stores):

<cache-container name="modeshape" default-cache="sample" module="org.modeshape" aliases="modeshape modeshape-cluster">
  <transport lock-timeout="60000"/>
  <replicated-cache name="myapp" start="EAGER" mode="SYNC">
    <transaction mode="NON_XA"/>
    <store class="org.infinispan.persistence.leveldb.configuration.LevelDBStoreConfigurationBuilder" preload="false" passivation="false" fetch-state="true" purge="false">
      <property name="location">${jboss.server.data.dir}/modeshape/store/myapp-${jboss.node.name}/data-</property>
      <property name="expiredLocation">${jboss.server.data.dir}/modeshape/store/myapp-${jboss.node.name}/expired-</property>
      <property name="compressionType">SNAPPY</property>
      <property name="implementationType">JNI</property>
    </store>
  </replicated-cache>
</cache-container>

<cache-container name="modeshape-binary-cache-container" default-cache="binary-fs" module="org.modeshape" aliases="modeshape-binary-cache">
  <transport lock-timeout="60000"/>
  <replicated-cache name="myapp-binary" start="EAGER" mode="SYNC">
    <transaction mode="NON_XA"/>
    <store class="org.infinispan.persistence.leveldb.configuration.LevelDBStoreConfigurationBuilder" preload="false" passivation="false" fetch-state="true" purge="false">
      <property name="location">${jboss.server.data.dir}/modeshape/binary-store/myapp-binary-data-${jboss.node.name}/data-</property>
      <property name="expiredLocation">${jboss.server.data.dir}/modeshape/binary-store/myapp-binary-data-${jboss.node.name}/expired-</property>
      <property name="compressionType">SNAPPY</property>
      <property name="implementationType">JNI</property>
    </store>
  </replicated-cache>
  <replicated-cache name="myapp-binary-metadata" start="EAGER" mode="SYNC">
    <transaction mode="NON_XA"/>
    <store class="org.infinispan.persistence.leveldb.configuration.LevelDBStoreConfigurationBuilder" preload="false" passivation="false" fetch-state="true" purge="false">
      <property name="location">${jboss.server.data.dir}/modeshape/binary-store/myapp-binary-metadata-${jboss.node.name}/data-</property>
      <property name="expiredLocation">${jboss.server.data.dir}/modeshape/binary-store/myapp-binary-metadata-${jboss.node.name}/expired-</property>
      <property name="compressionType">SNAPPY</property>
      <property name="implementationType">JNI</property>
    </store>
  </replicated-cache>
</cache-container>

...

<repository name="myapp" cache-name="myapp" anonymous-roles="admin">
  <node-types>
    <node-type>myapp.cnd</node-type>
  </node-types>
  <workspaces>
    <workspace name="default"/>
  </workspaces>
  <cache-binary-storage data-cache-name="myapp-binary" metadata-cache-name="myapp-binary-metadata" cache-container="modeshape-binary-cache-container"/>
  <text-extractors>
    <text-extractor name="tika-extractor" classname="tika" module="org.modeshape.extractor.tika"/>
  </text-extractors>
</repository>

After struggling (because of the OOM errors) to insert my documents into the repository, I found that even this simple piece of code makes the JVM crash:

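import java.io.File;
import java.io.FileOutputStream;
import java.io.InputStream;
import javax.jcr.Binary;
import javax.jcr.Node;
import javax.jcr.NodeIterator;
import javax.jcr.query.Query;
import javax.jcr.query.QueryManager;
import javax.jcr.query.QueryResult;
import org.apache.commons.io.IOUtils;
import org.apache.jackrabbit.JcrConstants; // assumed source of JCR_CONTENT / JCR_DATA

// session: an already logged-in javax.jcr.Session
String expression =
    "SELECT file.* " +
    "FROM [nt:file] AS file " +
    "INNER JOIN [nt:resource] AS content " +
    "ON ISCHILDNODE(content, file) " +
    "WHERE content.[jcr:mimeType] = 'application/zip'";

QueryManager queryManager = session.getWorkspace().getQueryManager();
Query query = queryManager.createQuery(expression, Query.JCR_SQL2);
QueryResult queryResult = query.execute();
NodeIterator nodeIterator = queryResult.getNodes();

while (nodeIterator.hasNext()) {
    Node fileNode = nodeIterator.nextNode();
    Node contentNode = fileNode.getNode(JcrConstants.JCR_CONTENT);
    Binary binary = contentNode.getProperty(JcrConstants.JCR_DATA).getBinary();
    // No deleteOnExit(): it keeps every path registered until the JVM exits,
    // and the file is deleted explicitly in the finally block anyway
    File tempFile = File.createTempFile("binary", null);
    // try-with-resources closes the binary's stream and the output file even if the copy fails
    try (InputStream binaryStream = binary.getStream();
         FileOutputStream fileOutputStream = new FileOutputStream(tempFile)) {
        IOUtils.copyLarge(binaryStream, fileOutputStream);
    } finally {
        binary.dispose();
        tempFile.delete();
    }
}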

It simply copies the content of each (sometimes large) ZIP file stored in the repository to a temporary file on disk, then deletes that file.
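To check whether the copy itself could be to blame, the per-file step can be reproduced with the JDK alone. Here is a minimal sketch under that assumption: the hypothetical helper copyToTempFileAndDelete uses java.nio.file.Files.copy in place of Commons IO's IOUtils.copyLarge, always closes the source stream, deletes the temporary file, and returns the number of bytes copied.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

public class TempCopy {

    // Hypothetical helper: copies the stream to a temporary file, then deletes the file.
    // The source stream is closed in every case, even if the copy fails.
    static long copyToTempFileAndDelete(InputStream source) throws IOException {
        Path tempFile = Files.createTempFile("binary", null);
        try (InputStream in = source) {
            // REPLACE_EXISTING is needed because createTempFile has already created the file
            return Files.copy(in, tempFile, StandardCopyOption.REPLACE_EXISTING);
        } finally {
            Files.deleteIfExists(tempFile);
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[5 * 1024 * 1024]; // 5 MiB of zeros as stand-in content
        long copied = copyToTempFileAndDelete(new ByteArrayInputStream(data));
        System.out.println("Copied " + copied + " bytes");
    }
}
```

In the repository loop it would be called as copyToTempFileAndDelete(binary.getStream()), followed by binary.dispose().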

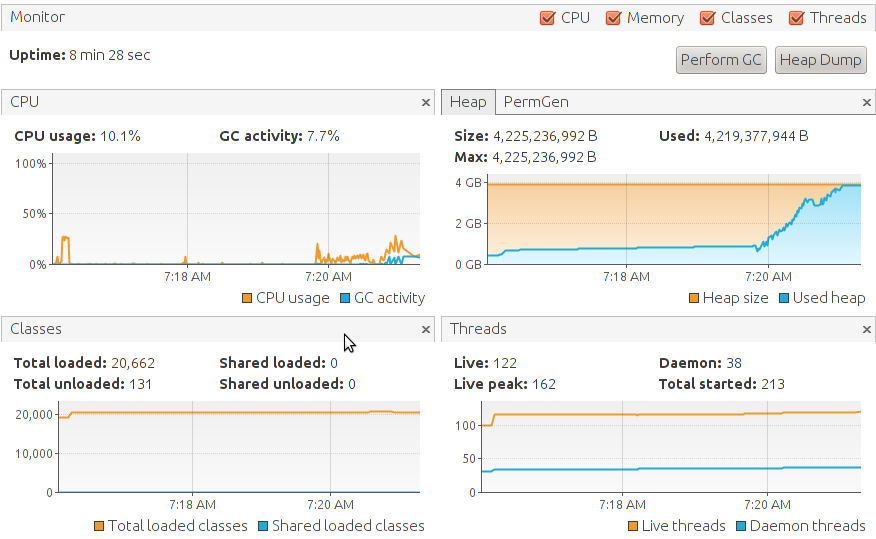

Here's what the memory consumption pattern looks like in Visual VM:

My Java debugging skills being what they are, I couldn't determine the origin of this continuous memory growth.
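One way to narrow this down is to log heap usage from inside the loop (for example once per copied binary) and see whether the growth follows the number of files or their size. A minimal sketch with the standard java.lang.management API; the HeapLogger class and its output format are illustrative only, and the "used" figure includes garbage that has not been collected yet, so only the trend over many iterations is meaningful.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;

public class HeapLogger {

    private static final MemoryMXBean MEMORY = ManagementFactory.getMemoryMXBean();
    private static final long MIB = 1024L * 1024L;

    // Heap currently in use, in bytes (includes garbage not yet collected).
    static long usedHeapBytes() {
        return MEMORY.getHeapMemoryUsage().getUsed();
    }

    // Prints used and maximum heap in MiB, prefixed with a caller-supplied label.
    static void log(String label) {
        MemoryUsage heap = MEMORY.getHeapMemoryUsage();
        // getMax() returns -1 when no maximum is defined
        String max = heap.getMax() < 0 ? "undefined" : (heap.getMax() / MIB) + " MiB";
        System.out.printf("%s: used=%d MiB, max=%s%n", label, heap.getUsed() / MIB, max);
    }

    public static void main(String[] args) {
        log("start");
        byte[][] retained = new byte[8][];
        for (int i = 0; i < retained.length; i++) {
            retained[i] = new byte[(int) MIB]; // simulate 1 MiB kept reachable per iteration
            log("iteration " + i);
        }
        System.out.println("Arrays still referenced: " + retained.length);
    }
}
```

In the repository loop, calling HeapLogger.log(fileNode.getPath()) after each copy would tie every reading to a specific node.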

The only thing I could see in jhat is that the heap fills up with huge numbers of [B (byte arrays) and [C (char arrays).
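Instead of only looking at the heap after the crash, a heap dump can be taken at a chosen point (say, after a few hundred binaries) and opened in jhat or VisualVM to see which objects keep those arrays reachable. The JVM can also write one automatically when the error occurs, via -XX:+HeapDumpOnOutOfMemoryError (with -XX:HeapDumpPath to choose the location). The sketch below assumes a HotSpot-based JVM such as the Oracle JDK or OpenJDK, because it relies on com.sun.management.HotSpotDiagnosticMXBean; the HeapDumper class and the file names are arbitrary.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

public class HeapDumper {

    // Writes an .hprof heap dump; live=true forces a full GC first and keeps only reachable objects.
    static Path dumpHeap(Path target, boolean live) throws IOException {
        HotSpotDiagnosticMXBean diagnostics =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        // Use a fresh path: the dump fails if the file already exists,
        // and some JDK versions also insist on the .hprof suffix.
        diagnostics.dumpHeap(target.toString(), live);
        return target;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("heapdump");
        Path dump = dumpHeap(dir.resolve("myapp.hprof"), true);
        System.out.println("Heap dump written to " + dump + " (" + Files.size(dump) + " bytes)");
    }
}
```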

I've tested with both the Oracle JDK and OpenJDK, using various GC and heap-size settings.

I'm running ModeShape 4.0.0.Beta1 on WildFly 8.1, on Ubuntu 14.04 inside an LXC container (in case that matters).

Thanks in advance for your help; I've run out of ideas.