JMS consumer performance regression in HornetQ 2.4 during consumer acknowledge() call

benspiller Jan 13, 2015 11:34 AM

We're looking at upgrading the JMS provider we recommend from HornetQ 2.3.0 to 2.4.0, but we're seeing a significant (~50%) regression in the number of messages received per second from a queue. For now we're focusing on NON_PERSISTENT messages. We use CLIENT_ACKNOWLEDGE mode (as recommended by the HornetQ docs) and batch many calls to consumer.receive() before each call to acknowledge(), to reduce the cost of the acknowledgements.
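For reference, here is a minimal sketch of the batched-acknowledgement loop described above. In real JMS code the session would be created with `Session.CLIENT_ACKNOWLEDGE`, and calling `acknowledge()` on a received `Message` acknowledges every message the session has consumed so far. To keep the sketch compilable without a JMS jar, `AckingConsumer`, `BatchedAckLoop` and `FakeConsumer` are hypothetical stand-ins, not HornetQ or JMS classes.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical stand-in for the two JMS calls discussed here. With a real provider
// these would be MessageConsumer.receive(timeout) and Message.acknowledge() on a
// Session created with Session.CLIENT_ACKNOWLEDGE.
interface AckingConsumer {
    /** Returns the next message, or null if none arrived within the timeout. */
    String receive(long timeoutMillis);
    /** Acknowledges every message received since the previous acknowledge(). */
    void acknowledge();
}

final class BatchedAckLoop {
    /**
     * Receives up to maxMessages messages, acknowledging once every batchSize
     * receives, plus a final acknowledge for any partial batch.
     * Returns the number of acknowledge() calls made.
     */
    static int consume(AckingConsumer consumer, int maxMessages, int batchSize) {
        if (batchSize < 1) throw new IllegalArgumentException("batchSize must be >= 1");
        int received = 0;
        int unacked = 0;
        int acks = 0;
        while (received < maxMessages) {
            String msg = consumer.receive(1000);
            if (msg == null) break;            // queue drained or receive timed out
            received++;
            unacked++;
            if (unacked == batchSize) {        // one acknowledge() covers the whole batch
                consumer.acknowledge();
                acks++;
                unacked = 0;
            }
        }
        if (unacked > 0) {                     // don't leave a partial batch unacknowledged
            consumer.acknowledge();
            acks++;
        }
        return acks;
    }
}

// In-memory fake used only to exercise the loop; it counts acknowledged messages.
final class FakeConsumer implements AckingConsumer {
    final Deque<String> queue = new ArrayDeque<>();
    int acknowledged = 0;
    private int pending = 0;

    FakeConsumer(int n) {
        for (int i = 0; i < n; i++) queue.add("msg-" + i);
    }

    public String receive(long timeoutMillis) {
        String m = queue.poll();
        if (m != null) pending++;
        return m;
    }

    public void acknowledge() {
        acknowledged += pending;
        pending = 0;
    }
}
```

The final acknowledge() after the loop matters: with CLIENT_ACKNOWLEDGE, messages still unacknowledged when the session is closed or recovered may be redelivered.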

We did some profiling, and it looks as though the difference in 2.4 is that far more time is spent in the acknowledge() call, so I was wondering whether any performance (or functionality) changes were made recently in this area that could explain it.

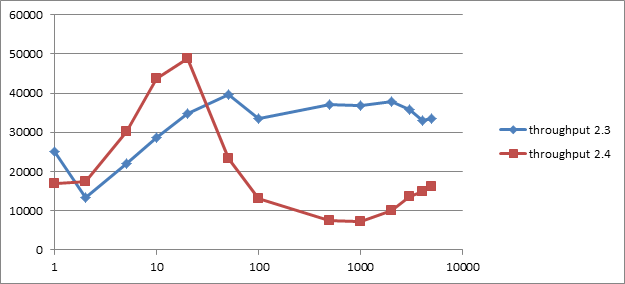

I also had a go at varying the number of receive() calls we make before calling acknowledge() and found that:

i) It makes a huge difference to throughput (much more than I expected, actually).

ii) Batch sizes of 100 or more receives per ack actually make performance worse rather than better. That's surprising, as I'd have thought going back to the server less often for the ack would help performance. I wonder what data structure is causing it to drop off so fast.

iii) The impact of batch size is very different between 2.3 and 2.4. In 2.3, performance improves and then stays relatively constant as batch size increases. In 2.4, throughput is higher at very low batch sizes, but above 20 msgs/batch it drops off very quickly, becomes much worse than 2.3, and continues to deteriorate as batch size increases:

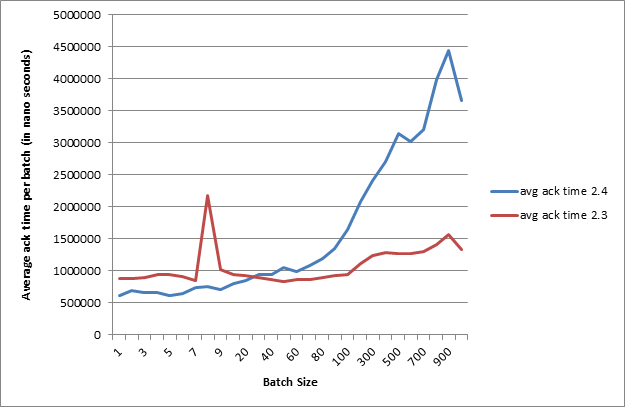
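A batch-size sweep like the one described in points (i) to (iii) could be structured roughly as follows. This is a sketch under stated assumptions: `AckingConsumer` and `BatchSizeSweep` are hypothetical names, and the `Supplier` is assumed to return a fresh consumer on a queue preloaded with the same number of messages for each run. The timing covers receive() as well as acknowledge(), so it measures end-to-end throughput rather than ack cost alone.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical minimal consumer abstraction standing in for a JMS MessageConsumer
// on a CLIENT_ACKNOWLEDGE session; not a HornetQ or JMS API.
interface AckingConsumer {
    Object receive(long timeoutMillis);
    void acknowledge();
}

final class BatchSizeSweep {
    /**
     * For each batch size, consumes up to `messages` messages from a freshly
     * created consumer, acknowledging every `batchSize` receives, and records
     * the observed throughput in messages per second.
     */
    static Map<Integer, Double> run(Supplier<AckingConsumer> factory, int messages, int... batchSizes) {
        Map<Integer, Double> results = new LinkedHashMap<>();
        for (int batchSize : batchSizes) {
            if (batchSize < 1) throw new IllegalArgumentException("batchSize must be >= 1");
            AckingConsumer consumer = factory.get();
            long start = System.nanoTime();
            int received = 0;
            int unacked = 0;
            while (received < messages) {
                if (consumer.receive(1000) == null) break;  // queue drained or timed out
                received++;
                if (++unacked == batchSize) {               // ack once per full batch
                    consumer.acknowledge();
                    unacked = 0;
                }
            }
            if (unacked > 0) consumer.acknowledge();        // flush the partial batch
            double seconds = Math.max(System.nanoTime() - start, 1L) / 1e9;
            results.put(batchSize, received / seconds);
        }
        return results;
    }
}
```

For meaningful numbers one would add a warm-up pass and repeat each batch size several times, since a single short run can be dominated by JIT compilation and connection setup.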

We also had a go at measuring the average time each acknowledge() call takes, and again found not only that it's worse for large batch sizes in 2.4, but also that the shape of the curve is quite different, with performance falling off very rapidly as batch size grows.
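One way to capture per-call acknowledge() timings like these is a small decorator that records wall-clock time around each call. This is a sketch only: `AckingConsumer` and `TimedAckConsumer` are hypothetical names standing in for the JMS `MessageConsumer`/`Message` pair. `System.nanoTime()` measures elapsed time on the client side, so the result reflects whatever work happens inside the call, which may or may not include waiting for the server depending on whether the provider blocks on acknowledgements.

```java
// Hypothetical stand-in interface so the sketch compiles without a JMS jar.
interface AckingConsumer {
    Object receive(long timeoutMillis);
    void acknowledge();
}

/** Decorator that times each acknowledge() call on the wrapped consumer. */
final class TimedAckConsumer implements AckingConsumer {
    private final AckingConsumer delegate;
    private long totalAckNanos = 0;
    private long ackCalls = 0;

    TimedAckConsumer(AckingConsumer delegate) {
        this.delegate = delegate;
    }

    public Object receive(long timeoutMillis) {
        return delegate.receive(timeoutMillis);  // receive() is passed through untimed
    }

    public void acknowledge() {
        long start = System.nanoTime();
        try {
            delegate.acknowledge();
        } finally {
            // Record the call even if acknowledge() throws.
            totalAckNanos += System.nanoTime() - start;
            ackCalls++;
        }
    }

    long ackCalls() {
        return ackCalls;
    }

    /** Mean wall-clock time per acknowledge() in microseconds, or 0 if none yet. */
    double meanAckMicros() {
        return ackCalls == 0 ? 0.0 : totalAckNanos / 1_000.0 / ackCalls;
    }
}
```

Reading `meanAckMicros()` after each run of a batch-size sweep gives one point per batch size for an ack-time curve.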

I'd be very interested to hear the dev team's thoughts on these observations. If possible, it would be great to get a fix that restores behaviour similar to the previous release (but I wanted to get your initial thoughts before filing a bug).

I also wonder whether it would be a good idea to add a section to the performance tuning part of the HornetQ docs about the effect of acknowledgement batch size on performance, and what you recommend. With most other JMS providers I've found that about 500 receives per acknowledgement gives the best performance, whereas HornetQ (at least for now) seems to behave differently, so it would be useful to document what you expect and recommend.

Thanks!