-

1. Re: Messaging failover without clustering?

jbertram May 26, 2015 11:03 AM (in response to bcremers)

Check out the documentation on this specific subject.

-

2. Re: Messaging failover without clustering?

bcremers May 26, 2015 11:44 AM (in response to jbertram)

I've been reading that documentation page and trying to implement it, without success.

I'll try to explain our setup. We've got two servers, both running a standalone WildFly instance and both also running a HornetQ broker in a separate VM.

We would like both WildFly instances to consume messages from the same "live" broker for most queues, but we've also got some "local" queues deployed to keep some work on the same server (no failover for these queues).

When our "live" broker fails, the non-"local" consumers should be switched to the backup broker.

Below is the configuration I've been trying, without success. Shutting down the live broker does not result in the consumers switching to the backup broker.

The reason we're trying this is that we are migrating our application from a Sun Application Server 9/Sun IMQ setup to a WildFly 8.2/HornetQ setup. In the former, this setup was working and we would like to achieve the same on the new one. Later on we might bring clustering on both WildFly and HornetQ into the picture, but at the moment we are not ready for this yet.

    <?xml version='1.0' encoding='UTF-8'?>
    <server xmlns="urn:jboss:domain:2.2">
      <!-- Removed for brevity -->
      <management>
        <!-- Removed for brevity -->
      </management>
      <profile>
        <subsystem xmlns="urn:jboss:domain:messaging:2.0">
          <hornetq-server name="live-server">
            <failover-on-shutdown>true</failover-on-shutdown>
            <allow-failback>true</allow-failback>
            <journal-file-size>102400</journal-file-size>
            <check-for-live-server>true</check-for-live-server>
            <shared-store>false</shared-store>
            <connectors>
              <http-connector name="http-connector" socket-binding="http">
                <param key="http-upgrade-endpoint" value="http-acceptor"/>
              </http-connector>
              <http-connector name="http-connector-throughput" socket-binding="http">
                <param key="http-upgrade-endpoint" value="http-acceptor-throughput"/>
                <param key="batch-delay" value="50"/>
              </http-connector>
              <netty-connector name="netty-remote" socket-binding="messaging-remote"/>
              <netty-connector name="netty-local" socket-binding="messaging-local"/>
              <in-vm-connector name="in-vm" server-id="0"/>
            </connectors>
            <acceptors>
              <http-acceptor http-listener="default" name="http-acceptor"/>
              <http-acceptor http-listener="default" name="http-acceptor-throughput">
                <param key="batch-delay" value="50"/>
                <param key="direct-deliver" value="false"/>
              </http-acceptor>
              <in-vm-acceptor name="in-vm" server-id="0"/>
            </acceptors>
            <jms-connection-factories>
              <pooled-connection-factory name="hornetq-ra">
                <transaction mode="xa"/>
                <connectors>
                  <connector-ref connector-name="netty-remote"/>
                </connectors>
                <entries>
                  <entry name="java:jboss/DefaultJMSConnectionFactory"/>
                  <entry name="java:/jms/XAIMQConnectionFactory"/>
                  <entry name="java:/jms/MQConnectionFactory"/>
                </entries>
              </pooled-connection-factory>
              <pooled-connection-factory name="hornetq-ra-local">
                <transaction mode="xa"/>
                <connectors>
                  <connector-ref connector-name="netty-local"/>
                </connectors>
                <entries>
                  <entry name="java:/jms/XALocalIMQConnectionFactory"/>
                  <entry name="java:/jms/XATopicConnectionFactory"/>
                </entries>
              </pooled-connection-factory>
              <pooled-connection-factory name="hornetq-ra-noTX">
                <transaction mode="none"/>
                <connectors>
                  <connector-ref connector-name="netty-local"/>
                </connectors>
                <entries>
                  <entry name="java:/jms/NoTransactionIMQConnectionFactory"/>
                </entries>
              </pooled-connection-factory>
            </jms-connection-factories>
            <jms-destinations>
              <!-- Shared Queue, both wildfly servers are consuming this -->
              <jms-queue name="BatchQueue">
                <entry name="java:/jms/BatchQueue"/>
              </jms-queue>
              <!-- Non-shared Queue, only the local wildfly server is consuming this -->
              <jms-queue name="LocalBatchQueue">
                <entry name="java:/jms/LocalBatchQueue"/>
              </jms-queue>
              <!-- Non-shared durable topic, only the local wildfly server is consuming this -->
              <jms-topic name="ChangeTopic">
                <entry name="java:/jms/ChangeTopic"/>
              </jms-topic>
            </jms-destinations>
          </hornetq-server>
          <hornetq-server name="backup-server">
            <backup>true</backup>
            <failover-on-shutdown>true</failover-on-shutdown>
            <allow-failback>true</allow-failback>
            <check-for-live-server>true</check-for-live-server>
            <!--<shared-store>false</shared-store>-->
            <connectors>
              <netty-connector name="netty-remote" socket-binding="messaging-remote-backup"/>
              <netty-connector name="netty-local" socket-binding="messaging-local-backup"/>
            </connectors>
          </hornetq-server>
        </subsystem>
      </profile>
      <interfaces>
        <interface name="management">
          <inet-address value="${jboss.bind.address.management:127.0.0.1}"/>
        </interface>
        <interface name="public">
          <inet-address value="${jboss.bind.address:127.0.0.1}"/>
        </interface>
        <interface name="unsecure">
          <inet-address value="${jboss.bind.address.unsecure:127.0.0.1}"/>
        </interface>
      </interfaces>
      <socket-binding-group name="standard-sockets" default-interface="public" port-offset="${jboss.socket.binding.port-offset:0}">
        <socket-binding name="management-http" interface="management" port="${jboss.management.http.port:9990}"/>
        <socket-binding name="management-https" interface="management" port="${jboss.management.https.port:9993}"/>
        <socket-binding name="ajp" port="${jboss.ajp.port:8009}"/>
        <socket-binding name="http" port="${jboss.http.port:8080}"/>
        <socket-binding name="https" port="${jboss.https.port:8443}"/>
        <socket-binding name="jacorb" interface="unsecure" port="3528"/>
        <socket-binding name="jacorb-ssl" interface="unsecure" port="3529"/>
        <socket-binding name="messaging-group" port="0" multicast-address="${jboss.messaging.group.address:231.7.7.7}" multicast-port="${jboss.messaging.group.port:9876}"/>
        <socket-binding name="txn-recovery-environment" port="4712"/>
        <socket-binding name="txn-status-manager" port="4713"/>
        <outbound-socket-binding name="mail-smtp">
          <remote-destination host="localhost" port="25"/>
        </outbound-socket-binding>
        <outbound-socket-binding name="messaging-remote">
          <remote-destination host="server-1-address" port="5455"/>
        </outbound-socket-binding>
        <outbound-socket-binding name="messaging-remote-backup">
          <remote-destination host="server-2-address" port="5455"/>
        </outbound-socket-binding>
        <outbound-socket-binding name="messaging-local">
          <remote-destination host="127.0.0.1" port="5455"/>
        </outbound-socket-binding>
      </socket-binding-group>
      <deployments>
        <!-- Removed for brevity -->
      </deployments>
    </server>

-

3. Re: Messaging failover without clustering?

jbertram May 26, 2015 12:20 PM (in response to bcremers)

I don't really understand your setup the way you've described it.

You said you don't need fail-over for the "local" queues running in the WildFly instances, but that is where you've configured your live and backup. Based on your description I would have expected you to have the default HornetQ configuration on your WildFly instances and then a live/backup configuration for the remote HornetQ brokers.

Also, the only reason to configure both a live and a backup HornetQ broker in the same WildFly instance is for a colocated setup, which enables both HA and clustering functionality, but you said you don't want clustering functionality.
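
As a rough illustration of moving the live/backup roles onto the remote brokers, the HA elements from the posted WildFly configuration could be split across the two standalone HornetQ instances roughly as follows. This is an untested sketch. It assumes each broker reads its own `hornetq-configuration.xml` in the `urn:hornetq` namespace, that the "e.g. server 1 / server 2" placement matches the hosts in the posted socket bindings, and that connectors, acceptors and whatever the two brokers need in order to find each other are configured separately (none of that is shown).

```xml
<!-- Live broker (e.g. on server 1): fragment of its hornetq-configuration.xml -->
<configuration xmlns="urn:hornetq">
    <!-- false = the backup replicates the journal instead of sharing it, as in the posted config -->
    <shared-store>false</shared-store>
    <failover-on-shutdown>true</failover-on-shutdown>
    <check-for-live-server>true</check-for-live-server>
    <!-- connectors and acceptors omitted -->
</configuration>

<!-- Backup broker (e.g. on server 2): fragment of its own, separate hornetq-configuration.xml -->
<configuration xmlns="urn:hornetq">
    <!-- marks this instance as passive until the live broker fails -->
    <backup>true</backup>
    <shared-store>false</shared-store>
    <allow-failback>true</allow-failback>
    <!-- connectors and acceptors omitted -->
</configuration>
```

With this split, the WildFly instances would no longer declare a `backup-server` of their own; they would only act as clients of the remote pair.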

-

4. Re: Messaging failover without clustering?

bcremers May 27, 2015 4:34 AM (in response to jbertram)

I'll try to further explain the setup.

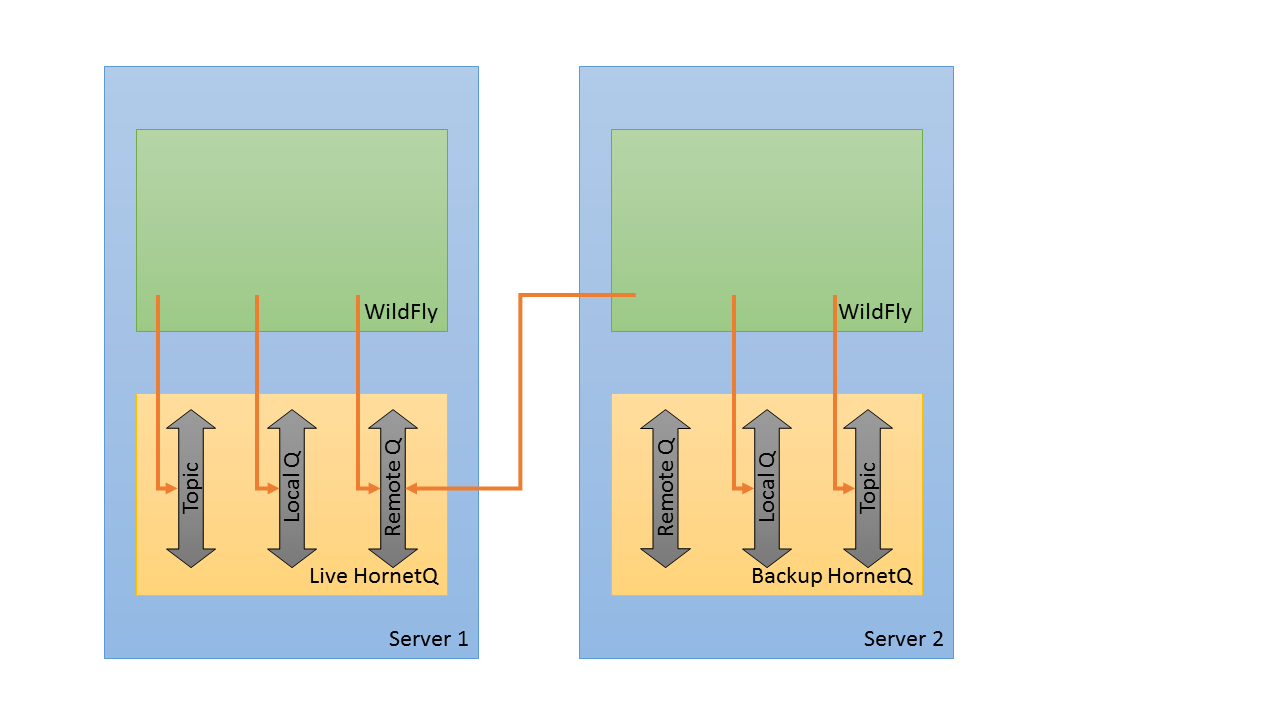

Below is a drawing of the "normal" situation. We've got 2 virtual servers, both running the same application on a WildFly. A loadbalancer will distribute the load over both servers.

Both servers also have a HornetQ instance running, with the same queues configured. We got some "local" queues running where only the WildFly on the same server will connect to.

We also got some "remote" queues where every WildFly instance will connect to.

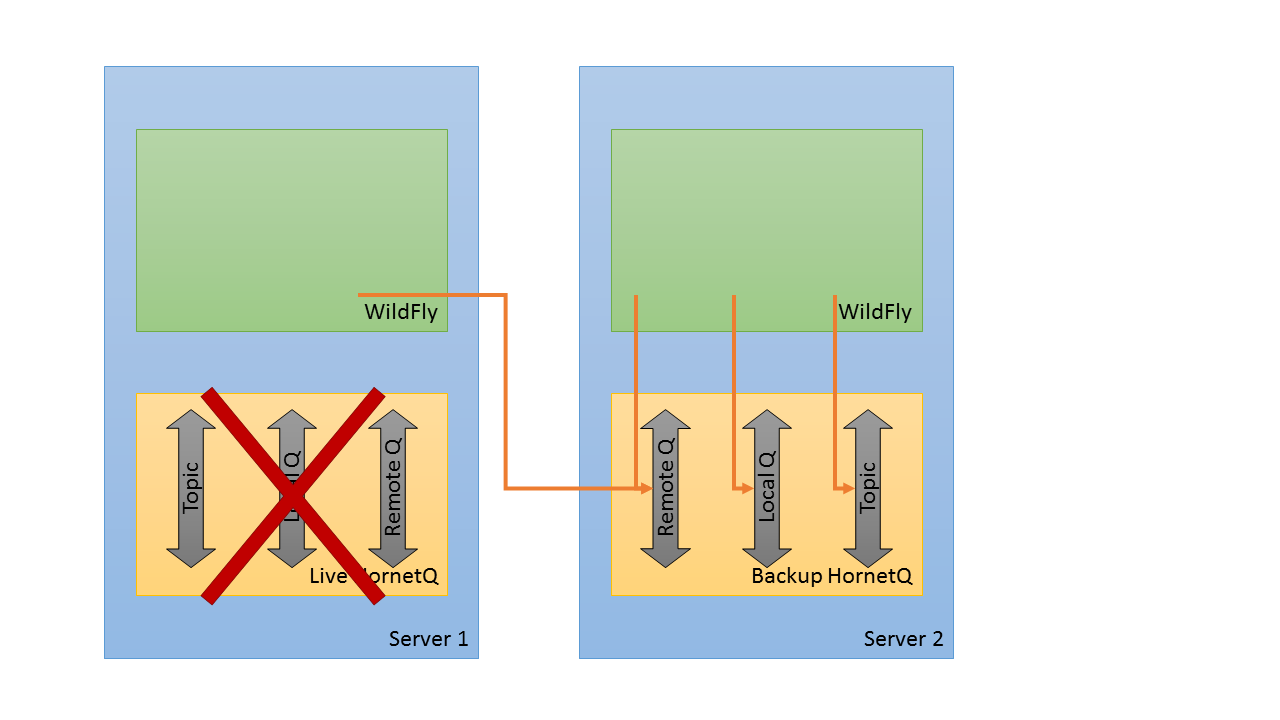

For these queues we need the backup HornetQ. Below the situation we will have if the Live HornetQ goes down.

I was looking at the WildFly configuration because I was thinking WildFly needs to handle the switch to the backup HornetQ, since HornetQ itself can't manage this as it may be gone. But I'll look into the HornetQ configuration to see if I can get any further.

-

5. Re: Messaging failover without clustering?

jbertram May 27, 2015 10:23 AM (in response to bcremers)

The first set of diagrams represents an impossible configuration for a HornetQ live/backup pair because a HornetQ backup is inactive until the live server fails. In other words, there is no possibility of the WildFly instance on the same server connecting to any "local" destinations on the backup HornetQ instance while the live HornetQ instance is up and running. You could, however, accomplish essentially the same thing by confining your "local" destinations to a HornetQ instance running in the same JVM as WildFly (one is configured by default in the standalone-full.xml configuration) and having the remote HornetQ live/backup pair host only the "remote" destinations.
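
A minimal sketch of how that split might look in the WildFly messaging subsystem, reusing the factory, queue and socket-binding names from the configuration posted earlier in the thread. Several things here are assumptions rather than part of the thread: the `netty-remote-backup` connector name is made up for illustration, and the `<ha>` and `<reconnect-attempts>` elements are included on the understanding that HornetQ clients only fail over when HA and reconnection are enabled on the connection factory (the posted configuration sets neither). Treat it as an untested starting point and check the element names and order against the messaging subsystem schema of your WildFly version.

```xml
<!-- Fragment of the messaging subsystem in standalone-full.xml; a sketch, not a tested configuration -->
<subsystem xmlns="urn:jboss:domain:messaging:2.0">
    <!-- In-JVM HornetQ: hosts only the "local" destinations, no live/backup settings -->
    <hornetq-server>
        <connectors>
            <!-- Remote live broker, via the outbound socket binding from the posted config -->
            <netty-connector name="netty-remote" socket-binding="messaging-remote"/>
            <!-- Remote backup broker; "netty-remote-backup" is a hypothetical name -->
            <netty-connector name="netty-remote-backup" socket-binding="messaging-remote-backup"/>
            <in-vm-connector name="in-vm" server-id="0"/>
        </connectors>
        <acceptors>
            <in-vm-acceptor name="in-vm" server-id="0"/>
        </acceptors>
        <jms-connection-factories>
            <!-- Factory for the "remote" queues hosted on the live/backup pair -->
            <pooled-connection-factory name="hornetq-ra">
                <transaction mode="xa"/>
                <connectors>
                    <connector-ref connector-name="netty-remote"/>
                    <connector-ref connector-name="netty-remote-backup"/>
                </connectors>
                <entries>
                    <entry name="java:/jms/XAIMQConnectionFactory"/>
                </entries>
                <!-- Assumption: needed for client-side failover to the backup -->
                <ha>true</ha>
                <reconnect-attempts>-1</reconnect-attempts>
            </pooled-connection-factory>
            <!-- Factory for the "local" queues, served by the in-JVM broker -->
            <pooled-connection-factory name="hornetq-ra-local">
                <transaction mode="xa"/>
                <connectors>
                    <connector-ref connector-name="in-vm"/>
                </connectors>
                <entries>
                    <entry name="java:/jms/XALocalIMQConnectionFactory"/>
                </entries>
            </pooled-connection-factory>
        </jms-connection-factories>
        <jms-destinations>
            <!-- Only the local queue is defined here; BatchQueue lives on the remote pair -->
            <jms-queue name="LocalBatchQueue">
                <entry name="java:/jms/LocalBatchQueue"/>
            </jms-queue>
        </jms-destinations>
    </hornetq-server>
</subsystem>
```

The point of the layout is that nothing "local" depends on the remote pair: if the live broker goes down, only connections created from `hornetq-ra` have to move to the backup.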

-

6. Re: Messaging failover without clustering?

bcremers Jun 8, 2015 4:22 AM (in response to jbertram)

The reason we needed this setup for IMQ is that IMQ did not allow shared durable subscriptions and the distribution of work was not OK. If I understand things correctly, neither issue should be present in HornetQ, so I think we are going with a live/backup pair, and sharedSubscriptions for our durable topic.