Tableau client not able to get batches from DV

sanjay_chaturvedi Oct 21, 2018 8:24 AM

Hi,

We are connecting to the Teiid DV layer from a Tableau client and running a simple select. The select is heavy and is expected to return around 10 million rows.

We have been struggling for some time with this query failing with the following message:

TEIID31261 Max estimated size 53,219,612,589 for a single operation/table id 120 has been exceeded. The server may need to increase the amount of disk or memory available, or decrease the number of max active plans. Sending error to client 1mqNEk34vl2U.8

We have already decreased max active plans to 1, but the error is still there. We also increased the allocated heap; that delayed the failure a bit but did not resolve it.

In teiid-command.log, I observed that the buffer space usage keeps increasing. Take a look at this series of log entries:

Obtained results from connector, current row count: 512

Obtained results from connector, current row count: 1024

Obtained results from connector, current row count: 1536

Obtained results from connector, current row count: 2048

... and so on, then finally:

Obtained results from connector, current row count: 9791488

Error:

Exceeding buffer limit since there are pending active plans or this is using the calling thread.

My assumption is that if the client keeps fetching based on its fetch size, the current row count should always equal the connector or processor batch size, so it should read something like 1024, 1024, and so on. My thinking is that the connector results, although obtained in batches, are possibly not being served to the client in batches, and that this is exhausting the buffer. Correct me if I am wrong.

processor batch size: 512
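As a quick sanity check on the numbers above, here is a minimal sketch in Python. The counts are copied by hand from the log lines quoted in this post, and the helper name `increments` is only for illustration. It checks whether each logged count grows by exactly one processor batch, which would suggest (this is my assumption, not something confirmed from the Teiid source) that "current row count" is a running total rather than a per-batch size.

```python
# Row counts copied by hand from the teiid-command.log lines quoted above.
logged_counts = [512, 1024, 1536, 2048]
final_count = 9791488          # last count logged before the error
processor_batch_size = 512     # value configured on the DV side


def increments(counts):
    """Return the difference between each consecutive pair of counts."""
    return [later - earlier for earlier, later in zip(counts, counts[1:])]


# Each step between consecutive log lines.
steps = increments(logged_counts)
print(steps)  # [512, 512, 512]

# How many full batches the final count corresponds to, and any leftover rows.
batches, remainder = divmod(final_count, processor_batch_size)
print(batches, remainder)  # 19124 0
```

Every step is 512 and the final count is an exact multiple of 512, so the counter looks cumulative. If that reading is right, a growing row count on its own would not show whether the client is consuming the batches or not.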

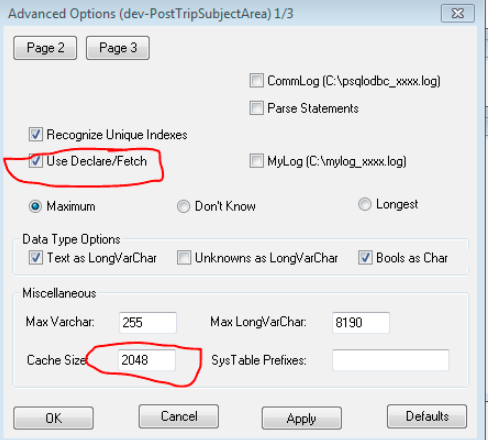

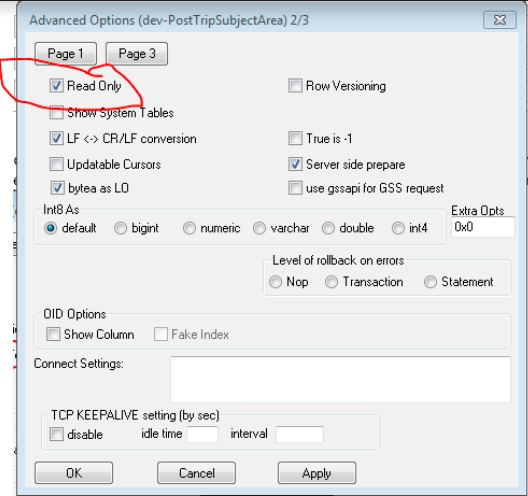

Also, in Tableau we could not find a setting like fetch-size or batch-size. I was under the impression that if that size could be set to 0, then whatever batch comes from DV would simply be passed through.

Please confirm whether my understanding is right. If it is wrong, what could be the cause and the resolution? Please assist. Thanks.