Do we need pre-computed aggregates?

john.sanda Oct 13, 2014 3:25 PM

I want to first provide some terminology and background to help frame the discussion.

When querying time series data, resolution refers to the number of data points for a given time range. The highest resolution would provide every available data point for a time range. So if I want a query to use the highest resolution and if there are 100 data points, then the query result should include every one of those 100 points.

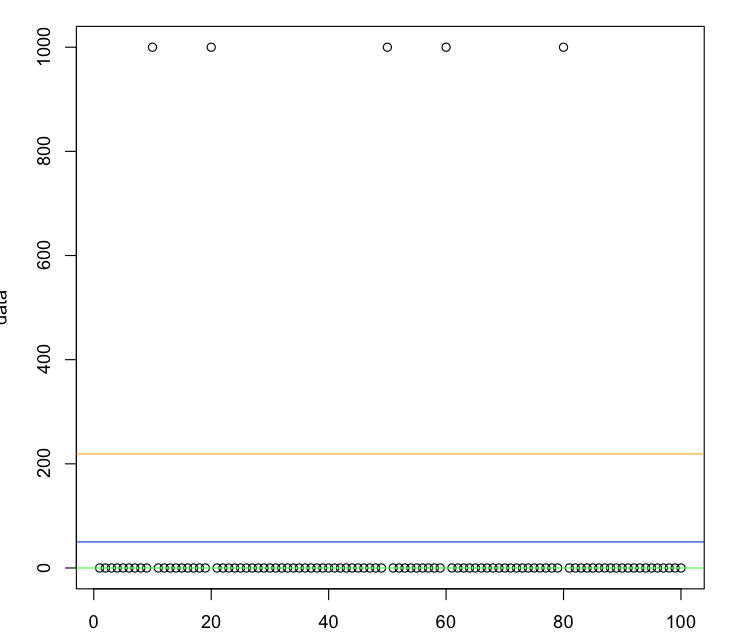

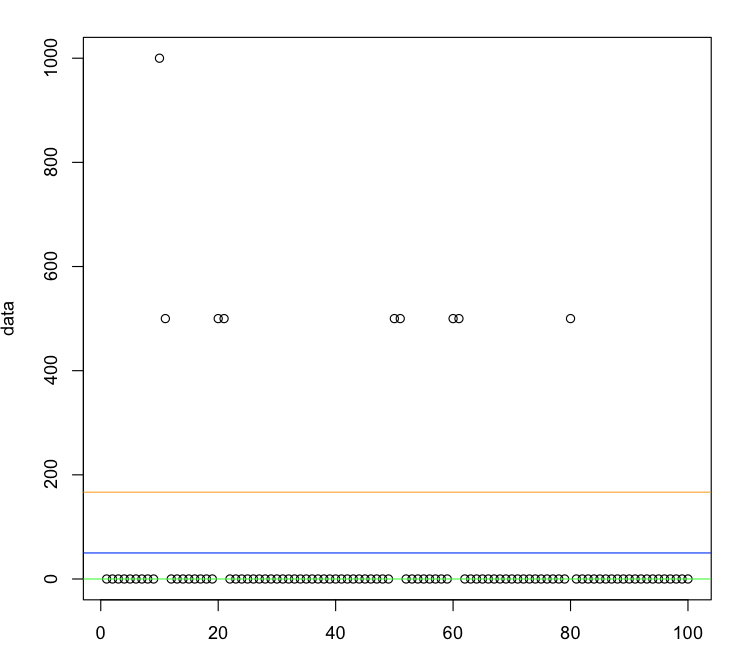

As the number of data points increases, providing results at higher resolutions becomes less effective. For instance, a graph in the UI that includes 1 million points is probably no more useful than one that includes only 10,000 or even 1,000 data points. The higher resolution could degrade user experience as rendering time increases. Server response latency is also likely to increase.

Downsampling is a technique to provide data at lower resolution. It may involve applying one or more aggregation functions to the time series data across some discrete number of intervals where the sum of intervals' durations equals the duration of the original date range. It should be noted that downsampling is done at query time.
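To make the idea concrete, here is a minimal sketch of query-time downsampling in Python. It is not taken from RHQ, OpenTSDB, or InfluxDB; the `downsample` function, its parameters, and the choice of min/max/avg are assumptions for illustration only. It splits the time range into equal-width intervals and applies each aggregation function to the values that fall in each interval.

```python
from statistics import mean


def downsample(points, start, end, num_buckets, funcs=None):
    """Reduce (timestamp, value) pairs in [start, end) to at most num_buckets rows.

    Hypothetical helper: the bucket widths add up to the full time range,
    and each bucket is summarized by the given aggregation functions.
    """
    if funcs is None:
        funcs = {"min": min, "max": max, "avg": mean}
    width = (end - start) / num_buckets
    buckets = [[] for _ in range(num_buckets)]
    for ts, value in points:
        if start <= ts < end:
            # Clamp to the last bucket to guard against floating point rounding.
            idx = min(int((ts - start) / width), num_buckets - 1)
            buckets[idx].append(value)
    result = []
    for i, values in enumerate(buckets):
        if not values:
            continue  # no data points in this interval, so emit nothing
        row = {"start": start + i * width}
        for name, fn in funcs.items():
            row[name] = fn(values)
        result.append(row)
    return result


# 100 raw data points reduced to 10 aggregated rows.
raw = [(t, float(t)) for t in range(100)]
rows = downsample(raw, start=0, end=100, num_buckets=10)
```

Note that every raw point in the range is still read and processed on each query; only the size of the result shrinks.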

Downsampling is a necessary technique for dealing with high resolution data, but is it sufficient? There are a couple of issues to take into consideration. First, the process itself can become CPU-intensive to the point where it increases response latency. Second, there is the issue of storage. Suppose we lengthen the date range on our queries such that it spans 1 trillion data points. Whether it is 1 million or 1 trillion, at some point storing that many data points for our metrics becomes cost prohibitive.

Pre-computed aggregation is the process of continually downsampling a time series and storing the lower resolution data for future analysis or processing. Pre-computed aggregates are often combined with data expiration/retention policies to address the aforementioned storage problem. Higher resolution data is stored for shorter periods of time than lower resolution data. Pre-computed aggregation can also alleviate the CPU utilization and latency problems. Instead of downsampling 1 million data points, we can query the pre-computed aggregated data points and perform downsampling on 10,000 data points.
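The interplay between pre-computed aggregates and retention can be sketched as follows. This is an in-memory toy, not how RHQ or InfluxDB implement it; the `RollupStore` class, its method names, and the use of integer timestamps in seconds are all assumptions made for the example. A periodic job summarizes each completed interval once, stores the summary, and then expires raw data sooner than the aggregated data.

```python
from statistics import mean


class RollupStore:
    """Hypothetical store that keeps raw points briefly and rollups longer."""

    def __init__(self, interval, raw_retention, rollup_retention):
        self.interval = interval                  # rollup bucket width, seconds
        self.raw_retention = raw_retention        # how long raw points are kept
        self.rollup_retention = rollup_retention  # how long rollups are kept
        self.raw = []                             # list of (timestamp, value)
        self.rollups = {}                         # bucket start -> summary dict

    def insert(self, ts, value):
        self.raw.append((ts, value))

    def run_aggregation(self, now):
        """Summarize completed intervals, then apply both retention policies."""
        current_bucket = now - now % self.interval
        grouped = {}
        for ts, value in self.raw:
            bucket = ts - ts % self.interval
            # Skip the interval still in progress and intervals already stored.
            # (Late-arriving data for a stored interval is ignored in this sketch.)
            if bucket < current_bucket and bucket not in self.rollups:
                grouped.setdefault(bucket, []).append(value)
        for bucket, values in grouped.items():
            self.rollups[bucket] = {
                "min": min(values),
                "max": max(values),
                "avg": mean(values),
            }
        # Higher resolution data expires sooner than lower resolution data.
        self.raw = [(ts, v) for ts, v in self.raw if ts >= now - self.raw_retention]
        self.rollups = {
            b: r for b, r in self.rollups.items() if b >= now - self.rollup_retention
        }


store = RollupStore(interval=60, raw_retention=120, rollup_retention=3600)
for t in range(300):
    store.insert(t, t)
store.run_aggregation(now=300)
```

After the run, the store holds five one-minute summaries covering all 300 seconds, but only the most recent 120 raw points. A query over the full range would read the summaries instead of the raw data.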

The following table provides a summary of a few systems and their support (or lack thereof) for downsampling and pre-computed aggregation.

| System | Supports downsampling? | Supports pre-computed aggregates? | Pre-computed aggregation configurable? |
|---|---|---|---|
| RHQ | Yes | Yes | No |
| OpenTSDB | Yes | No | N/A |
| InfluxDB | Yes | Yes | Yes |

There are plenty of other systems that could be included in this table, but these three are representative enough for this discussion. At one end of the spectrum we have OpenTSDB, which provides no support for pre-computed aggregates; they would have to be handled entirely by the client. OpenTSDB only stores data at its original resolution. RHQ is at the other end of the spectrum in that it does provide pre-computed aggregates, but they are in no way configurable. There is no way, for example, to specify which metrics you do or do not want pre-computed aggregates for. Nor is there a way to specify the intervals at which the downsampling is performed. InfluxDB falls in the middle of the spectrum: it provides pre-computed aggregates, which it calls continuous queries in its documentation, and they are configurable. You can define the continuous queries that you want along with the intervals and functions to use.

Back to the original question. Does RHQ Metrics need to provide pre-computed aggregates? I believe that the answer is yes, but it has to be configurable. If we have metrics with retentions as short as a week or even a month, the costs of pre-computed aggregates may outweigh the benefits. But for other metrics whose data we want to keep around longer, possibly indefinitely, pre-computed aggregates make a lot more sense.
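As a rough illustration of what "configurable" could mean, here is one possible shape for per-metric configuration. Nothing here reflects an actual or planned RHQ Metrics API; `AggregationPolicy`, `policy_for`, the glob patterns, and the metric names are all hypothetical. Each policy says whether aggregates are generated for matching metrics, at which intervals, and with which functions.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class AggregationPolicy:
    """Hypothetical per-metric settings for pre-computed aggregates."""
    pattern: str                              # glob matched against the metric name
    enabled: bool = True                      # False: keep raw data only
    intervals: tuple = ()                     # rollup widths in seconds
    functions: tuple = ("min", "max", "avg")  # aggregation functions to apply


# First match wins, so more specific patterns go first.
POLICIES = [
    # Short-lived metrics: aggregation likely costs more than it saves.
    AggregationPolicy("debug.*", enabled=False),
    # Everything else: hourly and daily rollups.
    AggregationPolicy("*", intervals=(3600, 86400)),
]


def policy_for(metric, policies=POLICIES):
    """Return the first policy whose pattern matches the metric name, or None."""
    for policy in policies:
        if fnmatchcase(metric, policy.pattern):
            return policy
    return None


short_lived = policy_for("debug.gc.pause")
long_lived = policy_for("cpu.load")
```

With this kind of setup, metrics with short retentions can opt out entirely while long-lived metrics get rollups at whatever intervals make sense for them.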