The data computation layer in between the data persistent layer and the application layer is responsible for computing the data from data persistence layer, and returning the result to the application layer. The data computation layer of Java aims to reduce the coupling between these two layers and shift the computational workload from them. The typical computation layer is characterized with below features:

1. Ability to compute on the data from arbitrary data persistence layers, not only databases, but also the non-database Excel, Txt, or XML files. Of all these computations, the key is the computation on the commonest structured data.

2. Ability to perform the interactive computations among various data sources uniformly, not only including the computation among different databases, but also calculation between the databases and non-database data sources.

3. The couplings between database and computation layer, as well as the computation layer and Java code can be kept as low as possible to facilitate the migration.

4. The architecture can be non-Java but should be integrated with Java conveniently.

5. Higher development efficiency in the respects of scripting, readability, debugging, and daily maintenance

6. As for the tendency of the complex computation and big data computation, the computation layer can provide the direct support to achieve the goal.

In this survey, 5 data computation layers of Hibernate, esProc, SQL, iBATIS, and R language are tested and compared on the basis of the below metrics: maturity, low coupling, scripting, integration, UI friendliness, performance, complex computation, support for big data, non-database computation, cross-database computation, and convenience for debugging.

Hibernate

Hibernate is the lightweight ORM frame, which was invented by Gavin King, and is now owned by JBOSS. It is the outstanding computational layer in the non-distributed environment, for example Intranet. Hibernate provides the access mode based on the object completely, while esProc and iBATIS can only be treated as the semi-object or object-alike ones.

Hibernate almost enables the complete uncoupling between computational scripts, Java code, and database completely. However, what’s a pity is that Hibernate still heavily rely on SP/SQL in many respects due to its lack of computational capability.

Besides, the EJB JPA is one of the computation layer protocols. Since Hibernate is actually the JPA, we will not dwell on it here.

Maturity: 4 stars. With more than a decade of market testing, Hibernate is already well developed and mature.

Low coupling: 4 stars. Hibernate was introduced for this advantage. But the local SQL is still unavoidable, and Hibernate is hard to achieve the perfect migration.

Scripting: 2 stars. Hibernate computation modes include the object reference and HQL. The former one can get 5 stars since it is quite easy. The latter one gets 2 starts because it is more difficult than SQL to lean, extremely tough to debug, and less efficient than SQL. Its unavoidable reliance on SQL to handle some computations makes users face the great challenge to use these two languages in combination. Let’s give it 2 stars and overall 3 stars on average.

Integration: 2 stars. Hibernate is built with the pure Java architecture. So, to integrate, just copy the jar package and several mapping files, and pay attention to use the session well. It is easy to start, but far more difficult when it comes to the mandatory Hibernate cache, which demands so extremely strong architectureal design capabilities that the normal programmers are not encouraged to explore it. Needless to say, the inherited disadvantage of ORM does not exist in other computation layers.

UI friendliness: 0 star. Hibernate provides the object generator, but lacks the most important HQL graphic design interface. Almost no usable GUI is available.

Performance: 3 stars. Its support for L3 cache is less capable than that of SQL. But it is equivalent to 60% of SQL all-roundly according to my personal experience.

Complex computation: 0 star. No support is available for the complex computation, and you may need the SQL/external tools.

Support for big data: 1 star. No direct support is available for hadoop architecture, and relevant research has been carried out.

Non-database computation: 0 star. No direct support is available for non-database computation.

Cross-database computation: 0 star. No direct support is available for cross-database computation. Each HQL only supports a single database.

Convenience for debugging: 0 star. The awkwardness in debugging is a fatal drawback for programmers.

esProc:

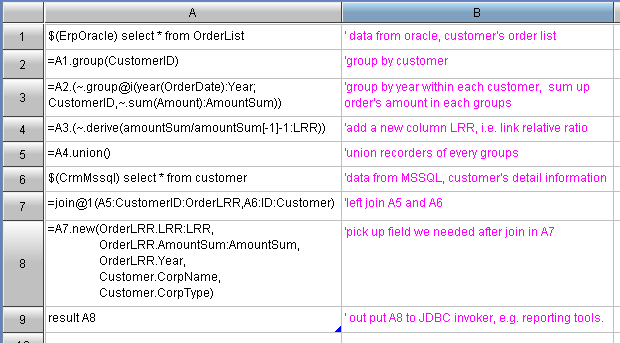

esProc is a newly-emerged Java development tool, especially designed to solve the complex cross-database computations. Unlike other data computation layers, esProc only takes SQL as a data source. Once data is retrieved from SQL, the computation in esProc is completely independent from SQL. By comparison, PJA/Hibernate would be forced to open the interface for SQL to handle some computations they are unable to handle.

Maturity: 1 star. Only 1 year passes since its entering the marketplace. The breadth and depth of its application is worse than other data computation layer.

Low coupling: 4 stars. Its scripts are independent from databases and Java codes, and its algorithms have nothing to do with the concrete database, which can be concluded as the low coupling. Immigration to various databases can be implemented easily. Because the output interface is JDBC, immigration to reports can be achieved easily as well. This is an unmatched feature in comparison to other computation layers.

Scripting: 4 stars. It allows for scripting in grid, reference with cell name, direct verifying result at each step, and decomposing a complex goal to several simple steps. Its syntax focuses on object-reference but no object only. Unlike the descriptive style of SQL statements, the brand new experience requires some knowledge about esProc. Java programmers will have their own choices based on their own experiences.

Integration: 5 stars. A pure Java architecture is adopted by esProc. The JDBC interface is offered for easy integration by any database programmer without esProc background.

UI friendliness: 4 stars. Independent graphic editor is easy to use and intuitive. However, the helpdesk system is not friendly enough.

Performance: 2 stars. All computations are performed in memory. So, it is not recommended to handle too huge amount of data.

Complex computation: 5 stars. This is purpose to develop esProc.

Non-database computation: 3 stars. Support for Excel/Txt, but not XML or Web Service.

Support for big data: 4 stars. HDFS access is offered. Plus, it is said that the parallel computing is also supported, but the details in pending to reveal.

Cross-database computation: 5 stars. esProc syntax is independent from the specific database, and supports the cross-database computation by nature.

Convenience for debugging: 5 stars. The debugging function is perfect and quite convenient to use. Considering its supports for verifying the computation steps of the finest granularity, we can say no other computation layers can offer such convenience.

SQL

SQL/SP/JDBC is of one kind. They are old computation layers boasting the performance and flexibility. However, things change that SQL alone become hard to satisfy the need, such as the growing development of Java, data explosion, and emerging complex problems. Although there are not so many high scores SQL have achieved, the weights are generally the highest.

Maturity: 5 stars. The most mature one.

Low coupling: 0 star. High coupling! Except for lab use, it’s nearly impossible for users to write the computational scripts independent from the databases and codes.

Scripting: 3 stars. SQL is actually very difficult to write and maintain, and cost users a great deal of time to learn. Fortunately, SQL is mature and well established. There are many forums with rich contents. Hibernate becomes popular because it addresses the need to solve the incapability between various data.

Integration: 5 stars. The first lesson of Java programmers is taught to connect the database through JDBC.

UI friendliness: 5 stars. Abundant SQL development tools with high maturity are available. I myself have tried more than 10 tools.

Performance: 5 stars. Databases support this language directly with the highest performance.

Complex computation: 3 stars. SQL is quite fit for the normal computation. The complex problems can be also solved with SQL but a very tough procedure is unavoidable, while Hibernate cannot cope with it at all. SP appears with no great improvement to the reality. The code is hard to split, mainly owing to the complex goal is hard to be decomposed into several simple steps.

Support for big data: 1 star. Several database vendors have declared their support for big data. This adds fuel to the incompatibility of SQL statements, and I’ve never seen a successful case yet.

Non-database computation: 1 star. No direct support is available. ETL/data warehouse can achieve the goal at great cost.

Cross-database computation: 1 star. Support several databases but the performance is poor. In addition, the middleware like DBLink and link server can barely support it to a degree far from “arbitrary and convenient”.

Convenience for debugging: 1 star. It is quite hard for users to debug and check the intermediate results. Only after all scripts have been run can users to check the final result. The only solution is “programing with debugging” which is deliberately creating a great numbers of temporary tables instantly.

iBATIS:

The computation layer is powerful for its simpleness and agility. Unlike Hibernate, iBATIS encourages programmers to write SQL statements. So, its learning cost is the least. In addition, iBATIS has implemented the uncoupling between computational scripts and Java code at the least cost – 80% Hibernate functions at 20% costs. The pending unsolved 20% is the uncoupling between the computational scripts and database.

The complex computation environment is its weak points, for example, distributed computation, complex computation, non-database computation, and cross-database computation.

Maturity: 4 stars. iBATIS is a proven frame with decades of marketing test. It is my favorite frame, though it still has the drawback of insufficient support for cache.

Low coupling: 2 stars. SQL can be replaced seamlessly. However, it’s still SQL for specific database. In facts, this latter one is the database-related problem. Since the vendor is determined to retain the customers, the incompatibility of SQL ensures the inability to migrate. On the other side, the programmers always look for the freedom to migrate by whatsoever means.

Scripting: 3 stars. It is SQL.

Integration: 4 stars. Basically, it’s not difficult. It takes the beginner half a day to grasp it well.

UI friendliness: 4 stars. No graphic design interface for computational procedure is available, but SQL tools can be used instead.

Performance: 3 stars. It’s slightly less powerful than SQL, mainly because the conversion between resultSet and map/list is a bit more time-consuming. In addition, its support for cache is worse than that of Hibernate. By general comparison, their differences are not great. In my opinion, it is a failure to introduce the ORM along with the performance problem.

Complex computation: 3 stars. It’s the same as SQL, and stronger than Hibernate.

Support for big data: 1 star. Same as SQL

Non-database computation: 1 star. Same as SQL

Cross-database computation: 1 star. Same as SQL

Convenience for debugging: 1 star. Same as SQL

R language

R is not easy to integrate with Java. However, it is worth to mention because of its powerful computational capability, wide support for communities and advantages in big data. Needless to say, it is the hardest to learn among all these computation layers.

Maturity: 5 stars. The long history of R is only shorter than that of SQL. R has been a hot topic in many forums, in particular in the age of big data.

Low coupling: 4 stars. R language makes no difference to that of esProc in this respect.

Scripting: 3 stars. In this respect, R is very similar to esProc. But esProc is more agile, more flexible in scripting, and more professional in supporting the structural data, while R has a great number of inbuilt model syntax. Let’s call it even.

Integration: 1 star. R is not built with Java architecture, and hard to integrate with Java. Considering its comparably unsatisfactory performance, the performance would drop dramatically once integrated.

UI friendliness: 3 stars. A specific IDE interface is offered. However, it is not finely built, and suffers the low usability, which is common to all open source products.

Performance: 2 stars. Full memory computation makes it hard to handle large data volume.

Complex computation: 5 stars. It’s similar to esProc.

Support for big data: 3 stars. There is a combination mechanism of using R together with Hadoop. But it is not easy to combine the non-Java system and Java-system, which also compromises the performance greatly.

Non-database computation: 5 stars. It’s similar to esProc.

Cross-database computation: 5 stars. It’s similar to esProc.

Convenience for debugging: 2 stars. The debugging is barely offered and is very unprofessional.