Transactional MSC

paul.robinson Sep 9, 2013 3:54 PM

Introduction

This discussion covers the "Transactional MSC" feature scheduled for a future release of WildFly. Although the feature is discussed in general, the focus of this document is to establish the requirements from Narayana and to discuss implementation details.

Transactional MSC Requirements

There should be a way to manipulate the container atomically in order to guarantee integrity of the container in both standalone and domain managed modes. The types of operations that must be transactional are:

- Modification of configuration values

- Lifecycle management of subsystems.

Each operation has a runtime effect on the in-memory configuration database in the MSC, plus a durable update to a configuration file (e.g. "standalone*.xml").

Failures

Failure to update configuration files (disk space, file system permissions) or modify configuration values must cause the operation to undo updates to all members of the domain.

Failure to deploy subsystems in a server farm is occasionally expected due to port conflicts and other environment issues. These failures should be reported to the user. It is expected that the user will then provide a policy (potentially including manual intervention) to determine whether to complete the update based on the number of expected failures. For example, it may be acceptable to continue the deployment of an application where 30% of application servers have failed to deploy it.
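A user-supplied policy of this kind could look roughly like the sketch below. It is only an illustration: the class name, the percentage threshold and the method signature are assumptions made for this example and do not correspond to any existing MSC or WildFly API.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical policy type; nothing here is an existing MSC or WildFly API. */
final class DeploymentFailurePolicy {
    private final int maxFailurePercent;

    DeploymentFailurePolicy(int maxFailurePercent) {
        if (maxFailurePercent < 0 || maxFailurePercent > 100) {
            throw new IllegalArgumentException("percentage must be within [0, 100]");
        }
        this.maxFailurePercent = maxFailurePercent;
    }

    /**
     * Decides whether the update may complete.
     * Each element is the outcome for one server: true means it deployed successfully.
     */
    boolean shouldComplete(List<Boolean> serverOutcomes) {
        if (serverOutcomes.isEmpty()) {
            return false; // no server reported an outcome, so there is nothing to complete
        }
        long failures = serverOutcomes.stream().filter(deployed -> !deployed).count();
        // Integer arithmetic avoids floating-point rounding at the threshold.
        return failures * 100 <= (long) maxFailurePercent * serverOutcomes.size();
    }

    public static void main(String[] args) {
        DeploymentFailurePolicy policy = new DeploymentFailurePolicy(30);
        List<Boolean> threeOfTenFailed =
                Arrays.asList(true, true, true, true, true, true, true, false, false, false);
        List<Boolean> twoOfThreeFailed = Arrays.asList(true, false, false);
        System.out.println("3 of 10 failed, complete? " + policy.shouldComplete(threeOfTenFailed));
        System.out.println("2 of 3 failed, complete? " + policy.shouldComplete(twoOfThreeFailed));
    }
}
```

A policy involving manual intervention would replace the threshold check with a prompt to the administrator; the decision point in the update stays the same.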

The ability to undo specific branches of the update should not be required assuming the MSC can tolerate all classes of subsystem failure mentioned above.

A note on isolation

Elements of the operations are permitted to be visible to other updates before their encompassing transaction is completed. For example, reserving a port will require creating a server socket binding.

Transports

The solution must run over JBoss Remoting. It is not possible to add further transport dependencies such as those required from JTS, RTS or WS-AT.

Audit

The auditing of MSC's transactions is out of scope for this discussion.

Performance

[Q] How much of an impact on performance can the solution impose? I appreciate this is probably hard/impossible to quantify.

[Q] What number of servers, present in the domain, should the solution scale to, whilst still maintaining an acceptable level of performance? I appreciate this is probably hard/impossible to quantify.

Transaction-management related requirements

Tx-logs

- The user must not see MSC's transaction logs alongside theirs when doing administration. This needs to apply to all administration mechanisms available to the user now and in the future. In particular, when using the object-store browser or CLI, only the applications' transaction logs should be visible. The same applies to the medium used to store the logs (e.g. filesystem, HQ Journal, DB).

Recovery

- Recovery of MSC's transactions must complete during some (currently unspecified) phase of the server's boot process. This is different to the applications' transactions that will be recovered after the server boots.

- It should not be possible to initiate a recovery scan of MSC's transactions via a network request.

Stats

- The aggregate transaction statistics presented to the user should be based solely on application transactions and not on any initiated by MSC.

[Q] Do statistics need to be available (somehow) for MSC's transactions or can they simply not be gathered?

Logging

[Q] I think a separate logger should be used for transactions initiated by MSC. Otherwise, when the user increases the log verbosity, they will start to see logs for transactions they do not recognize. This may not be a problem, as this currently happens if you have more than one application deployed; it's not clear which transaction log lines belong to which application.

Configuration

- MSC requires its own set of configuration values for the TM. This is to prevent the user from setting configuration values for their application(s) that are not compatible with the correct functionality of MSC. This applies to all those exposed via the ArjunaCore Environment Beans, not just those exposed via the WildFly management API.

The solution (provided by WildFly)

Prototype Solution

A prototype of the proposed solution is available here: https://github.com/paulrobinson/txmsc-prototype

The prototype shows how Arjuna Core can be extended to provide the functionality required for Transactional MSC. In particular it provides a Root Transaction that can enlist Subordinate Transactions that are located on a remote VM. The example also shows how crash recovery and orphan detection can be achieved. The readme.asciidoc file in the root of the project explains how to run the tests and the examples.

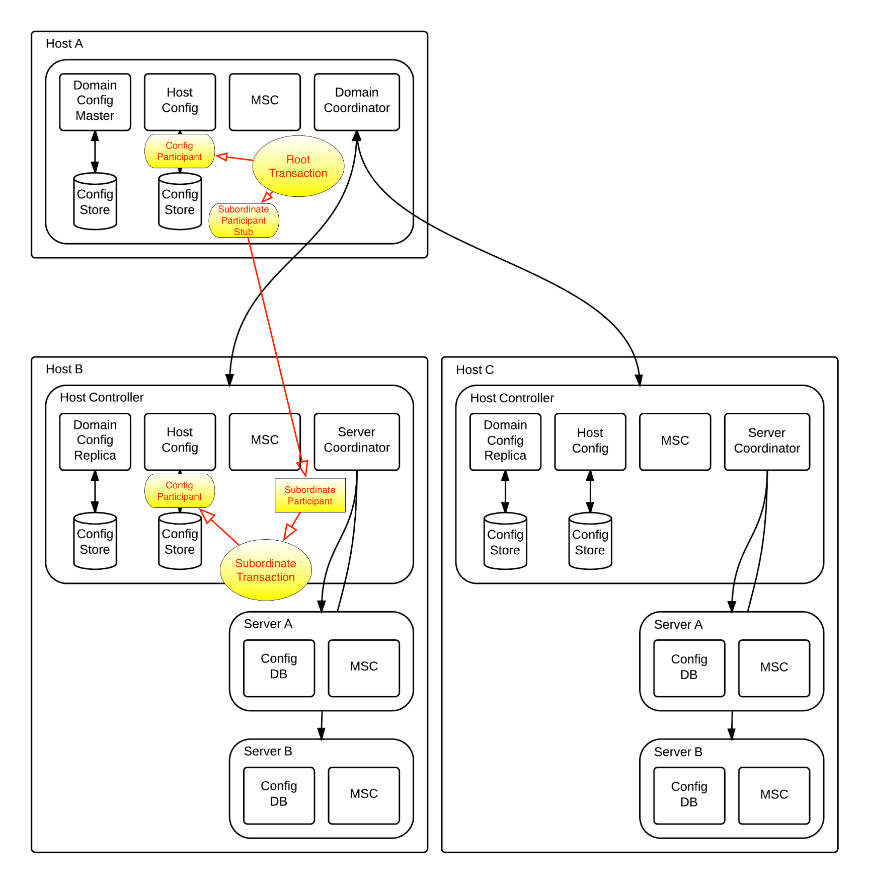

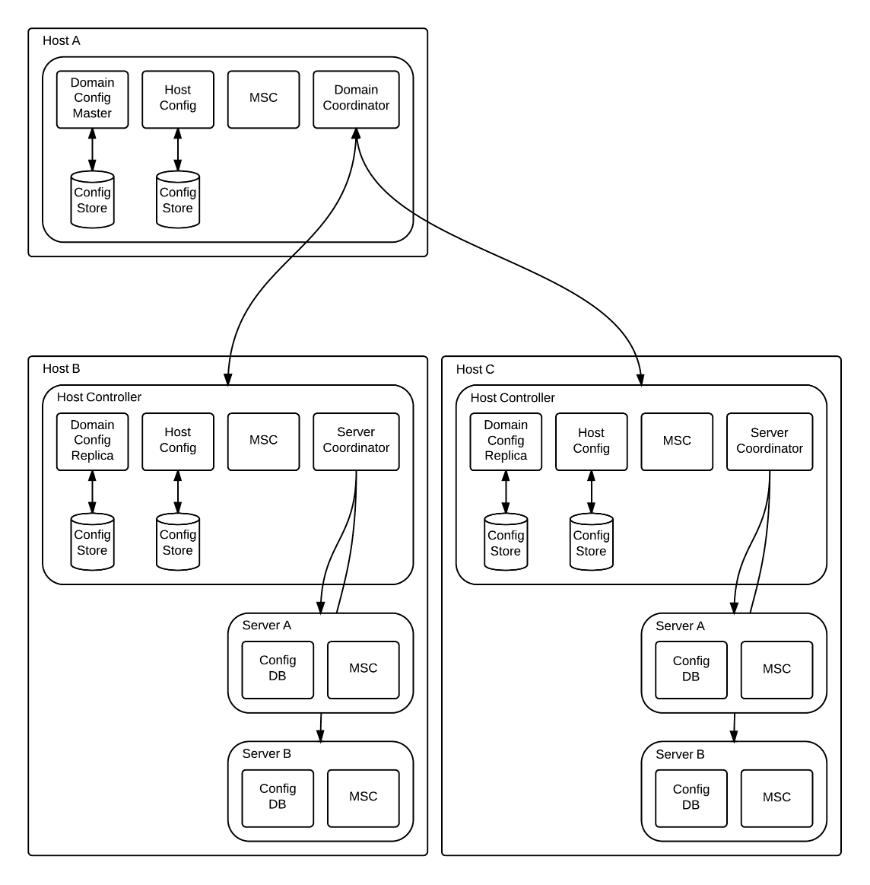

The diagram above shows how the pieces of the prototype fit in with the architecture proposed by the MSC team. The red and yellow shapes represent the pieces in the prototype. Notice that only one level of subordination is demonstrated, but it should be straightforward to extend to the server level and to support multiple hosts.

The ellipses represent the two transaction types. The RootTransaction runs with the Domain Coordinator, and the Subordinate Transaction runs on the Host Controller(s). The rounded boxes represent participants. There is one participant for the Config Service (used to update the Config Store). This resource is just mocked up in the prototype; the final implementation will need a robust resource for managing the transactional update to the Config Store. The "Subordinate Participant Stub" is another participant that is enlisted with the Root Transaction and makes remote calls to drive the Subordinate Transaction running in the Host Controller.

The prototype only mocks up the remote calls. The MSC team shall be responsible for implementing the remoting requirement (paying particular attention to correct handling of any new transport protocol exceptions introduced). It should be possible to apply techniques similar to those developed for distributed JTA over JBoss Remoting for the EJB container in the WildFly application server. The following fundamental differences should be considered:

- That was an XAResource implementation rather than AbstractRecord

- The EJB container made use of a subordinate transaction technique which is out of scope of this solution.

The object-store is not shown on the diagram. However, all participants and transactions are recoverable and state is persisted to the object-store, local to the VM in which they run.
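The relationship between the Root Transaction, its participants and the Subordinate Participant Stub can be sketched in plain Java as follows. This is a simplified illustration, not the prototype's code: the `Participant` interface is a hypothetical stand-in rather than the ArjunaCore AbstractRecord API, the "remote" call is a direct method call, and logging to the object-store is omitted.

```java
import java.util.ArrayList;
import java.util.List;

final class RootTransactionSketch {

    /** Simplified stand-in for a participant; NOT the ArjunaCore AbstractRecord API. */
    interface Participant {
        boolean prepare(); // vote: true means ready to commit
        void commit();
        void rollback();
    }

    /** The transaction that would run on a Host Controller. */
    static final class SubordinateTransaction {
        private final List<Participant> participants = new ArrayList<>();

        void enlist(Participant participant) { participants.add(participant); }

        boolean prepare() {
            for (Participant p : participants) {
                if (!p.prepare()) return false;
            }
            return true;
        }

        void commit() { participants.forEach(Participant::commit); }

        void rollback() { participants.forEach(Participant::rollback); }
    }

    /** Enlisted with the root; a real stub would make remote calls instead of local ones. */
    static final class SubordinateParticipantStub implements Participant {
        private final SubordinateTransaction subordinate;

        SubordinateParticipantStub(SubordinateTransaction subordinate) { this.subordinate = subordinate; }

        @Override public boolean prepare() { return subordinate.prepare(); }
        @Override public void commit() { subordinate.commit(); }
        @Override public void rollback() { subordinate.rollback(); }
    }

    /** The transaction that would run alongside the domain-level coordinator. */
    static final class RootTransaction {
        private final List<Participant> participants = new ArrayList<>();

        void enlist(Participant participant) { participants.add(participant); }

        /** Runs both phases; returns true if committed, false if rolled back. */
        boolean commit() {
            for (Participant p : participants) {
                if (!p.prepare()) {
                    participants.forEach(Participant::rollback);
                    return false;
                }
            }
            participants.forEach(Participant::commit);
            return true;
        }
    }

    /** Demo participant that records each call so the message order can be inspected. */
    static final class RecordingParticipant implements Participant {
        private final String name;
        private final boolean vote;
        private final List<String> calls;

        RecordingParticipant(String name, boolean vote, List<String> calls) {
            this.name = name;
            this.vote = vote;
            this.calls = calls;
        }

        @Override public boolean prepare() { calls.add(name + ":prepare"); return vote; }
        @Override public void commit() { calls.add(name + ":commit"); }
        @Override public void rollback() { calls.add(name + ":rollback"); }
    }

    public static void main(String[] args) {
        List<String> calls = new ArrayList<>();
        SubordinateTransaction subordinate = new SubordinateTransaction();
        subordinate.enlist(new RecordingParticipant("host-config", true, calls));

        RootTransaction root = new RootTransaction();
        root.enlist(new RecordingParticipant("domain-config", true, calls));
        root.enlist(new SubordinateParticipantStub(subordinate));

        System.out.println("committed=" + root.commit() + " calls=" + calls);
    }
}
```

In this sketch a negative vote from any participant, local or behind the stub, rolls back every enlisted participant, which mirrors the requirement that a failed configuration update is undone on all members of the domain.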

Recovery

Recovery (not shown on the diagram yet) is driven from the "Domain Controller". A recovery module (RootTransactionRecoveryModule.java) periodically scans for failed transactions. On finding one, the "Root Transaction", "Config Participant" and "Subordinate Transaction Stub" are restored from the log. When the "Subordinate Transaction Stub" is recreated, it connects to the associated Host Controller and requests that it restore the associated Subordinate Transaction from the log. The second phase of the transaction is then replayed.

As with the previously mentioned WildFly EJB container implementation, the resource manager (in this case the MSC container) is responsible for re-establishing remote connections to domain members required during recovery and correctly converting any transport protocol exceptions to ArjunaCore expected exceptions. It is known that some work (such as reserving a socket binding) would be associated with an abstract record, so that these resources can be released if the transaction rolls back. As mentioned previously, these operations have no isolation guarantees.
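The periodic scan and replay of the second phase described above might be pictured as in the following sketch. It is not the prototype's RootTransactionRecoveryModule: all names are hypothetical, the object-store is replaced by an in-memory map, and a failed remote connection is modelled as a runtime exception that leaves the log entry in place for the next scan.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class RecoveryScanSketch {

    /** A participant restored from the log; commit must be safe to repeat. */
    interface RestoredParticipant {
        void commit();
    }

    /** In-memory stand-in for the object-store: prepared Root Transaction id -> its participants. */
    static final class PreparedLog {
        private final Map<String, List<RestoredParticipant>> entries = new LinkedHashMap<>();

        void write(String txId, List<RestoredParticipant> participants) {
            entries.put(txId, participants);
        }

        boolean contains(String txId) {
            return entries.containsKey(txId);
        }
    }

    /** One pass of the periodic scan; returns the ids of the transactions that completed. */
    static List<String> scan(PreparedLog log) {
        List<String> completed = new ArrayList<>();
        // Copy the key set so entries can be removed while iterating.
        for (String txId : new ArrayList<>(log.entries.keySet())) {
            try {
                for (RestoredParticipant participant : log.entries.get(txId)) {
                    participant.commit(); // replay the second phase
                }
                log.entries.remove(txId);
                completed.add(txId);
            } catch (RuntimeException unreachable) {
                // Leave the entry in the log; the next periodic scan retries it.
            }
        }
        return completed;
    }

    public static void main(String[] args) {
        int[] attempts = {0};
        RestoredParticipant configParticipant = () -> { };
        // Simulates a Host Controller that is unreachable during the first scan only.
        RestoredParticipant subordinateStub = () -> {
            if (attempts[0]++ == 0) {
                throw new IllegalStateException("Host Controller unreachable");
            }
        };

        PreparedLog log = new PreparedLog();
        log.write("root-1", Arrays.asList(configParticipant, subordinateStub));

        System.out.println("first scan completed:  " + scan(log));
        System.out.println("second scan completed: " + scan(log));
    }
}
```

Because a participant may be asked to commit again on a later scan, the sketch assumes commit is idempotent; a real implementation would also need to map transport exceptions onto the outcomes ArjunaCore expects.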

Orphan Detection

It is possible that a failure can occur at such a time that the Subordinate Transaction is logged without the associated Root Transaction being logged. This occurs if the failure happens after the Subordinate Transaction prepares (and thus writes its log), but before the Root Transaction prepares (and thus does not write its log). This causes an orphan transaction to be present on the Host Controller. As orphans relate to a Root Transaction that never committed, they can simply be rolled back. Detection of orphan Subordinate Transactions is driven by the Domain Controller. Once the recovery of known Root Transactions has completed successfully, it is known that any other Subordinate Transactions, started by that Domain Controller, can be rolled back. This is driven by the Domain Controller, which makes a remote call to the Host Controller requesting that it roll back all transactions (not in-flight) started by that Host Controller. Note it is important to distinguish between transactions that are in-flight for a failed Root Transaction and those in-flight for a new Root Transaction that was started since recovery completed. The prototype doesn't currently make this distinction.
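A rough sketch of the Host Controller side of orphan detection follows. All names are hypothetical and the log is an in-memory map. It assumes the call is made only after recovery of the known Root Transactions has completed, and, like the prototype, it uses a single in-flight flag and so does not distinguish in-flight work for a failed Root Transaction from in-flight work for a newly started one.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class OrphanDetectionSketch {

    /** One logged Subordinate Transaction on a Host Controller (hypothetical layout). */
    static final class SubordinateLogEntry {
        final String txId;
        final String startedBy; // id of the Domain Controller that started it
        final boolean inFlight;

        SubordinateLogEntry(String txId, String startedBy, boolean inFlight) {
            this.txId = txId;
            this.startedBy = startedBy;
            this.inFlight = inFlight;
        }
    }

    static final class HostController {
        private final Map<String, SubordinateLogEntry> log = new LinkedHashMap<>();

        void record(SubordinateLogEntry entry) {
            log.put(entry.txId, entry);
        }

        boolean isLogged(String txId) {
            return log.containsKey(txId);
        }

        /**
         * Invoked (remotely, in a real system) by the Domain Controller once it has
         * finished recovering its known Root Transactions. Whatever is still logged
         * for that Domain Controller and is not in-flight is an orphan.
         */
        List<String> rollbackOrphans(String domainControllerId) {
            List<String> rolledBack = new ArrayList<>();
            Iterator<SubordinateLogEntry> entries = log.values().iterator();
            while (entries.hasNext()) {
                SubordinateLogEntry entry = entries.next();
                if (entry.startedBy.equals(domainControllerId) && !entry.inFlight) {
                    entries.remove(); // stands in for rolling back and deleting the log record
                    rolledBack.add(entry.txId);
                }
            }
            return rolledBack;
        }
    }

    public static void main(String[] args) {
        HostController host = new HostController();
        host.record(new SubordinateLogEntry("sub-1", "dc-A", false)); // orphan
        host.record(new SubordinateLogEntry("sub-2", "dc-A", true));  // still in-flight
        host.record(new SubordinateLogEntry("sub-3", "dc-B", false)); // another controller's
        System.out.println("rolled back: " + host.rollbackOrphans("dc-A"));
    }
}
```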

Sequence Diagram (NEEDS UPDATING)

The following diagram shows the sequence of messages that would occur with this solution. In particular it is simplified to just two levels (IIRC there is a third level, but I don't recall the details). The first 'level' contains the root MSC coordinator (Actors: Client, MSC, AtomicAction, ServiceProxy, ConfigProxy) and the second 'level' contains all the servers in the domain requiring the update (Actors: Service, Config). The diagram also omits any interposition techniques. This could be done to reduce the number of messages sent between the MSC coordinator and each server.

Changes required in Narayana

Our current understanding is that all the changes in Narayana are focused around isolating MSC's usage of ArjunaCore from the applications' and users' usage. Therefore it should be possible to prototype transactional MSC with the latest Narayana 5.x release.

Proposed short-term solution

WildFly provides two instances of Narayana, each in its own classloader:

Application Instance

The first instance is provided for applications deployed to WildFly and appears to be identical to the current offering available in WildFly today (from the user and applications' point of view).

MSC Instance

The second instance of Narayana is provided in a different classloader and has the following attributes:

- It only has ArjunaCore available.

- It has its own instance of the ObjectStore, Recovery Manager and Transaction Reaper.

- It gathers its own statistics or no statistics.

- The recovery manager cannot be driven over the network.

- This instance cannot be accessed via WildFly management.

- Its statistics are not available when viewing the application-transactions' statistics.

- It uses a different logger category (or some other mechanism) to distinguish its log entries from the first Narayana instance's. This also allows different log-levels to be set.
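The effect of separate logger categories can be illustrated with the JDK's java.util.logging, used here only as a stand-in for whatever logging framework the two Narayana instances would actually use. The category names below are made up for the example and are not real Narayana or WildFly categories.

```java
import java.util.logging.Level;
import java.util.logging.Logger;

final class SeparateLoggerCategories {
    // Hypothetical category names; static references keep the loggers from being garbage collected.
    static final Logger APPLICATION_TX = Logger.getLogger("example.tx.application");
    static final Logger MSC_TX = Logger.getLogger("example.tx.msc");

    /** Raises verbosity for application transactions while keeping MSC's transactions quiet. */
    static void configure() {
        APPLICATION_TX.setLevel(Level.FINE);
        MSC_TX.setLevel(Level.WARNING);
    }

    public static void main(String[] args) {
        configure();
        System.out.println("application FINE enabled: " + APPLICATION_TX.isLoggable(Level.FINE));
        System.out.println("MSC FINE enabled:         " + MSC_TX.isLoggable(Level.FINE));
        MSC_TX.warning("MSC transaction warnings are still reported");
    }
}
```

With this arrangement a user who increases verbosity for their own transactions does not start seeing detailed log lines for transactions initiated by MSC.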

Proposed long-term solution

Update Narayana to support isolation of applications. Each application can have its own configuration and will see an isolated view of statistics. The current view is that this is possible to achieve but will be a lot of work. We plan to revisit this solution once we are further down the path with the Transactional MSC implementation.